I’ve already done quite an extensive write-up on the best graphics cards for LLM inference and training this year, but this time I’ve decided to compile an even more versatile resource for all of you who dabble with local and private AI software, including image generation WebUIs, AI vocal cover tools, LLM roleplay chat interfaces and much more. Let’s get straight into it!

This web portal is reader-supported, and is a part of the Amazon Services LLC Associates Program and the eBay Partner Network. When you buy using links on our site, we may earn an affiliate commission!

Who Is This List For?

Well, the first thing that I need to clarify is that this guide will work best for those of you who:

- Want to further train Stable Diffusion checkpoints or fine-tunings like LoRA and LyCORIS models.

- Need a GPU for training LLM models in a home environment, on a single home PC (again, including LoRA fine-tunings for text generation models).

- Are interested in efficiently training RVC voice models for making AI vocal covers, or fine-tuning models such as XTTS for higher quality voice cloning.

- …and are looking for a new graphics card that will also perform the best for model inference in many different contexts, including the ones mentioned above.

If you’re approaching custom AI model training from the standpoint of a machine learning enthusiast or big data engineer, you will also find these GPUs quite useful, although there are other, possibly more interesting solutions for you, such as running multiple graphics cards in one system. That, however, is a topic for a whole new article. So now, let’s get to one last thing that needs to be said before we get to the list itself.

Which Parameters Really Matter When Picking a GPU For Training AI Models?

The amount of VRAM, max clock speed, cooling efficiency and overall benchmark performance. That’s it. Let’s quickly explain all of these, and why they matter in this particular context.

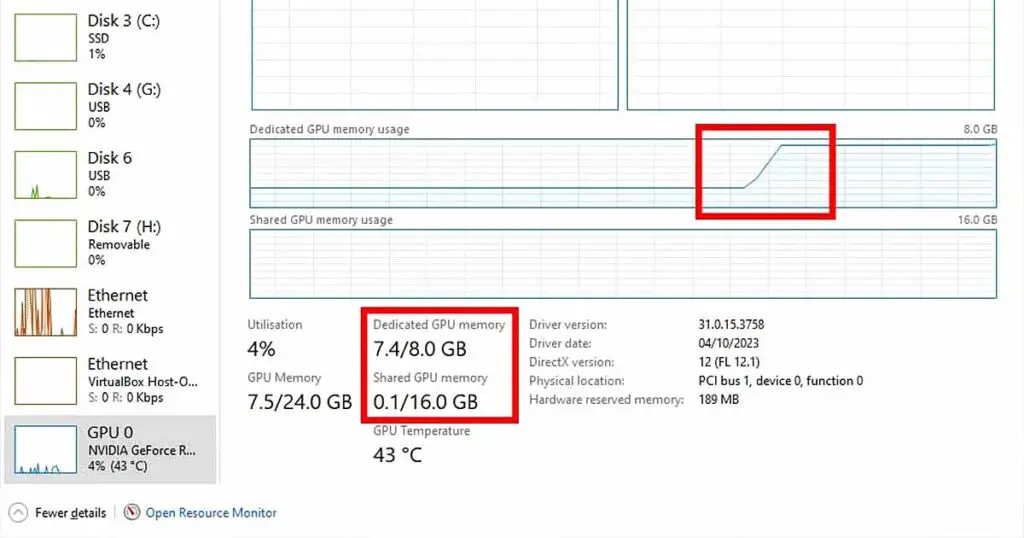

- VRAM – For training and fine-tuning AI models, and for running any calculations on large batches of data efficiently, you need as much VRAM as you can get. In general, you don’t want less than 12GB of video memory, and the 24GB models are ideal if you can afford them. The cost of having too little differs by task. When training LoRA models for Stable Diffusion, less VRAM mostly forces you to process smaller batches at once, which makes the process much slower. With open-source large language models, a lack of VRAM can completely lock you out of many of the larger, higher quality text generation models.

- Max clock speed – This is pretty self-explanatory: the faster the GPU can make calculations, the faster you can get through the model training process. This, however, takes us straight to the next point.

- Cooling efficiency – Fast calculation generates a large amount of heat that needs to be dissipated. Once the GPU hits its thermal limit and can’t cool itself quickly enough, thermal throttling starts: the core clock slows down so as not to cook your precious piece of smart metal. My take is: always aim for 3-fan GPUs whose cooling has been proven in benchmarks. In my experience, with the graphics cards mentioned in this list (which are pretty much the top shelf), cooling is hardly ever an issue.

- Overall benchmark performance – Once again, whenever in doubt, check the benchmark tests, which luckily are available all over the internet these days. These are useful mostly when comparing two high-end cards with each other.

These very same things matter just as much for AI model inference, that is, for using generative AI software to quickly get your desired outputs.
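If you want to see whether your own card is running into its thermal limit during a long training run, here is a minimal sketch that reads temperature, core clock and VRAM usage through `nvidia-smi`. It assumes an NVIDIA driver with `nvidia-smi` on the PATH, and the 83 degree threshold in `looks_hot` is only a placeholder I picked for illustration, so check the limit for your specific card. The function names are my own and not part of any library.

```python
import subprocess

# Fields passed to nvidia-smi's --query-gpu option.
QUERY_FIELDS = ["name", "temperature.gpu", "clocks.sm", "memory.used", "memory.total"]


def parse_gpu_stats(csv_text):
    """Parse `nvidia-smi --format=csv,noheader,nounits` output into dicts.

    Assumes every field is reported as a plain number (no "N/A" values)
    and that the GPU name contains no commas.
    """
    stats = []
    for line in csv_text.strip().splitlines():
        name, temp, clock, used, total = [part.strip() for part in line.split(",")]
        stats.append({
            "name": name,
            "temp_c": int(temp),
            "sm_clock_mhz": int(clock),
            "vram_used_mib": int(used),
            "vram_total_mib": int(total),
        })
    return stats


def read_gpu_stats():
    """Query the local NVIDIA driver; requires nvidia-smi on the PATH."""
    cmd = [
        "nvidia-smi",
        "--query-gpu=" + ",".join(QUERY_FIELDS),
        "--format=csv,noheader,nounits",
    ]
    output = subprocess.run(cmd, capture_output=True, text=True, check=True).stdout
    return parse_gpu_stats(output)


def looks_hot(gpu, temp_limit_c=83):
    """Flag a GPU at or above an assumed temperature limit (placeholder value)."""
    return gpu["temp_c"] >= temp_limit_c
```

Calling `read_gpu_stats()` every few seconds while training and watching whether `sm_clock_mhz` drops as `temp_c` climbs is a simple way to spot throttling.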

With that out of the way, let’s get to the actual list. Feel free to use the orange buttons to check the prices of the mentioned GPUs. If you’re interested in used models, eBay is one of the best places to get an idea of the current price of a second-hand GPU unit, so I’ve included these links as well.

Oh, and by the way, if you’re wondering why this list doesn’t include AMD cards, the reason is that AMD, while very cost-effective in the GPU department, is still quite a bit behind NVIDIA when it comes to AI software compatibility, as sad as I am to say that, being a covert AMD fanboy, especially when it comes to gaming. More about that in the very last section, under the list itself.

1. NVIDIA GeForce RTX 4090 24GB – Performance Winner

- Excellent performance.

- The best GPU model for local AI training.

- Comes in a 24GB variant – which is about as much VRAM as you can get.

- For now, no other models beat the 4090 in GPU benchmark scores.

- The price is quite high.

- The performance jump from the 4080 is also high, although it’s debatable whether it’s worth paying this much for.

The NVIDIA GeForce RTX 4090 is right now unbeatable when it comes to speed, and it comes with 24GB of VRAM on board, which is currently the highest amount you can expect from consumer GPUs.

However, it’s pricey, and as some (including me) would say, overpriced. This is just how it is with the NVIDIA graphics cards these days, and sadly it’s not likely to change anytime soon. With that out of the way, it has to be said that if you have the money to spare, this is the card to go for if you want the absolute best performance on the market, including the highest amount of VRAM accessible by regular means.

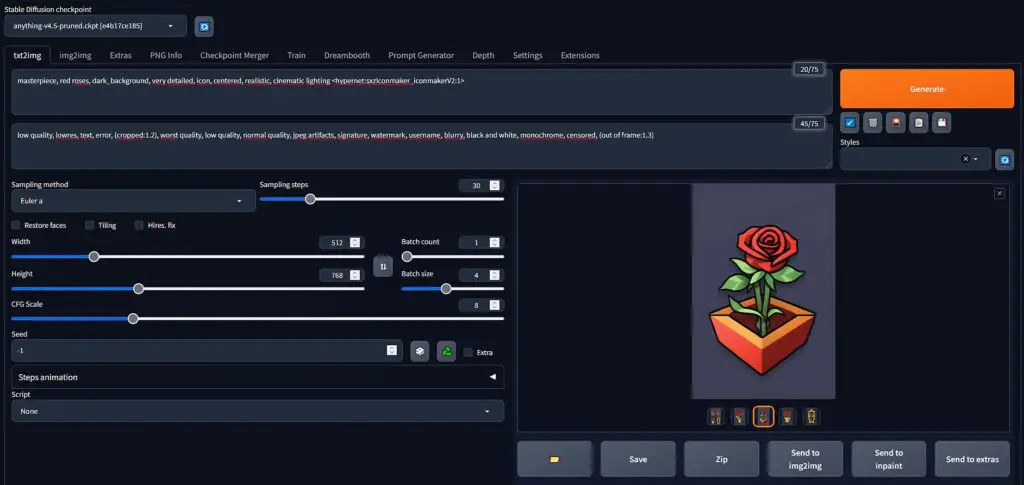

With the right configuration, the 4090 will give you near-instant generations with most basic locally hosted LLMs and with local Stable Diffusion based image generation software, as well as the best performance when it comes to model training and fine-tuning.

Performance-wise, it’s the best card on this list. Cost-wise, well, some things never change. Regardless, it wouldn’t be right not to begin with the RTX 4090, which is currently the most sought-after NVIDIA GPU on the market. With that said, let’s now move to some more affordable options, as there are quite a few to choose from here!

2. NVIDIA GeForce RTX 4080 16GB

- Performance still above average.

- Price is just a little bit lower.

- You can find good second-hand deals on this one.

- The price is still far from ideal.

- Noticeably worse benchmark scores compared to the 4090.

- “Only” 16 GB of VRAM on board.

When it comes to the NVIDIA GeForce RTX 4080, in terms of sheer performance it takes second place on my grand GPU top-list, right after the 4090. It does this, however, with a much lower amount of video memory on board.

The 16GB of GDDR6X VRAM is the highest amount of video memory you can get when buying the 4080, and while it certainly isn’t the dreaded 8GB of VRAM on older graphics cards, you could do better at a much lower price point, for instance with the RTX 3090 Ti 24GB, which I’m going to talk about in a very short while.

Again, if you have the money to spare and you’re already looking at GPUs in the higher price range, I would rather go for the 4090, or scroll down this list to find more cost-effective options which, as it turns out, don’t fall that far behind these two top models when it comes to their computational abilities.

Overall, the 4080 is still a card that is very much worth looking into, even more so if you can find it used for a good price (for instance here on eBay). But there is one more proud representative of the 4xxx series I want to show you, one that goes head to head with the already mentioned models while being much more reasonably priced.

3. NVIDIA GeForce RTX 4070 Ti 12GB

- Still a pretty good choice performance-wise.

- There are a lot of great deals for it out there.

- 12GB of VRAM can be too little for some use cases and applications.

- Less efficient than the 4080 and the 4090 computation-wise.

Now comes the time for the NVIDIA GeForce RTX 4070 Ti, taking the 3rd place on the podium. Is 12GB of VRAM too little? Here is what I have to say about it.

As I’ve already said, if you’re planning to train AI models and in general make your GPU do complex math on large data batches, you want to have access to as much video memory as possible. However, what also matters here is the computation speed. And quite surprisingly, when it comes to that, the 4070 Ti again does not fall very far behind the first two cards on this list, in the end being approximately 20% slower than the RTX 4080.

While 12GB of VRAM certainly isn’t as much as the 24GB you can get with the 4090 or the 3090 Ti, it doesn’t really disqualify the 4070 Ti here. In my area, you can get the 4070 Ti for much less than both the 4080 and 4090, and that’s primarily what made me consider picking it up for my local AI endeavors. Still there are a few other options out there, including my personal pick, which is the next card on this list.

4. NVIDIA GeForce RTX 3090 Ti 24GB – The Best Card For AI Training & Inference

- Best price to performance ratio.

- 24GB of VRAM on board.

- Still a relatively recent and very much relevant card.

- Mentioned the most online when it comes to GPUs for local AI software.

- Many great second-hand deals.

- Not as powerful as the 4xxx series.

- The 24GB of VRAM makes it effectively more expensive than the 4070 Ti (but it’s well worth the cost).

Here it is! The NVIDIA GeForce RTX 3090 Ti is definitely my winner when it comes to the most cost-effective and most efficient GPUs for local AI model training and inference this year. Here is why.

When it comes to performance, the 3090 Ti is very close to the 4070 Ti, all while having substantially more VRAM on board (24GB vs 12GB) and being much more cost-effective than the NVIDIA 4xxx series, especially if purchased on the second-hand market (once again, keep an eye on the eBay listings).

You can learn more about the 3090 here: NVIDIA GeForce 3090/Ti For AI Software – Is It Still Worth It?

The best part is that many people upgrading to newer GPUs this year have started to sell their older graphics cards, and the 3090 Ti was one of the most popular models from the previous generation. There are many great deals out there, especially on local marketplaces. The 3090 series is also mentioned in almost every online thread when the topic of best GPUs for local AI comes up. And for good reasons.

The 24GB of VRAM at this price point is pretty impressive, and you won’t find any card like this in the current NVIDIA lineup when it comes to the price to performance ratio. If you’re looking for a card with the most VRAM you can get in a consumer NVIDIA GPU for now, without the steep price of the new RTX 4090, the 3090 Ti and the original 3090 (which is just a little bit behind but features the same amount of VRAM) are your best bets.
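Since prices move around a lot, the price-to-VRAM argument is easy to re-check yourself against whatever listings you find. Below is a minimal sketch that ranks cards by dollars per gigabyte of VRAM. The VRAM sizes are the ones quoted in this article; the helper name `price_per_gb` is my own, and the prices are deliberately left for you to fill in rather than hard-coded.

```python
# VRAM sizes (in GB) as quoted for each card in this article.
VRAM_GB = {
    "RTX 4090": 24,
    "RTX 4080": 16,
    "RTX 4070 Ti": 12,
    "RTX 3090 Ti": 24,
    "RTX 3080 Ti": 12,
    "RTX 3060": 12,
}


def price_per_gb(prices_usd):
    """Return (card, dollars per GB of VRAM) pairs, cheapest per GB first.

    prices_usd maps a card name from VRAM_GB to the price you found for it.
    """
    rows = [(card, price / VRAM_GB[card]) for card, price in prices_usd.items()]
    return sorted(rows, key=lambda row: row[1])
```

Pass in a dictionary of the prices you actually see, new or second-hand, and the card at the top of the result gives you the most video memory for your money. Keep in mind this ignores raw compute speed, so treat it as one input rather than the whole decision.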

5. NVIDIA GeForce RTX 3080 Ti 12GB

The NVIDIA GeForce RTX 3080 Ti and the 3090 Ti lie very close together on the performance chart, although the 3080 Ti features only 12GB of VRAM and draws about as much power as the original 3090.

Just because of that, this is a pretty short description. If you find the 3080 Ti used in a good state and for a really good price, go for it. If however getting a 3090/3090 Ti is not a problem for you, it would be a better decision in the end. You get twice the amount of VRAM and better performance after all. With that said, let’s get to the best budget choice on the list – the much older yet still quite reliable RTX 3060.

6. NVIDIA GeForce RTX 3060 12GB – If You’re Short On Money

- Cheap & reliable.

- 12 GB of VRAM for this price is a very nice deal.

- New ones can be found for less than $300 in a 2-fan configuration.

- Although this card is certainly not “slow” by modern standards, it’s still the least powerful one on this list.

- If you also plan to play AAA games using the 3060, it won’t last you as long as the remaining listed GPUs.

The NVIDIA GeForce RTX 3060 with 12GB of VRAM on board and a pretty low current market price is in my book the absolute best tight budget choice for local AI enthusiasts both when it comes to LLMs, and image generation.

I can already hear you asking: why is that? Well, the prices of the RTX 3060 have already fallen quite substantially while its performance, as you might have guessed, has not. In most benchmarks this card places right after the RTX 3060 Ti and the 3070, and you will be able to run most 7B or 13B models with moderate quantization on it at decent text generation speeds. With the right model and the right configuration, you can get almost instant generations in low to medium context window scenarios!
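To get a feel for why quantization matters so much on a 12GB card, here is a rough back-of-the-envelope sketch. It only counts the memory taken by the model weights (parameters times bits per weight), and the 2 GB `overhead_gb` allowance for the context cache and runtime is an assumption of mine, not a measured value. Real quantization formats also use slightly more bits per weight than their nominal figure, so treat the results as a lower bound.

```python
def weights_vram_gb(params_billions, bits_per_weight):
    """Memory needed for the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return params_billions * bits_per_weight / 8


def fits(params_billions, bits_per_weight, vram_gb, overhead_gb=2.0):
    """Rough check; overhead_gb is an assumed allowance, not a measured one."""
    return weights_vram_gb(params_billions, bits_per_weight) + overhead_gb <= vram_gb


# Compare 7B and 13B models at 16-bit, 8-bit and 4-bit against a 12 GB card.
for params in (7, 13):
    for bits in (16, 8, 4):
        need = weights_vram_gb(params, bits)
        verdict = "fits" if fits(params, bits, 12) else "does not fit"
        print(f"{params}B at {bits}-bit: {need:.1f} GB of weights, {verdict} in 12 GB")
```

Under these assumptions a 7B model needs 14 GB at 16-bit but only 3.5 GB at 4-bit, and a 13B model drops from 26 GB to 6.5 GB, which is why the quantized versions are the ones that make sense on the 3060.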

Once again, if we’re talking about the absolute lowest price GPU you could use for training and fine-tuning smaller models on your PC, getting the RTX 3060 is most probably the answer for you. Also, as always check out the latest second-hand deals for this card over on eBay – you can find an even better offer this way.

Hey, What About AMD Cards? – The Promised Addendum

As I’ve already mentioned in my local LLM GPU top list, AMD is still a little bit behind NVIDIA when it comes to both GPU features and support for various modern AI software (AMD cards don’t support CUDA), although its cards are still way cheaper on average.

If you’re not a brave tinkerer at heart and you don’t welcome lots of potential troubleshooting, I would go with NVIDIA – at least for this year. I do hope that after some time, I will be able to proudly update this paragraph, and the market dynamics will shift. For now, I’ll stick with NVIDIA, with my wallet remaining way less happy than me after finally benchmarking my new GPU. Until next time!
