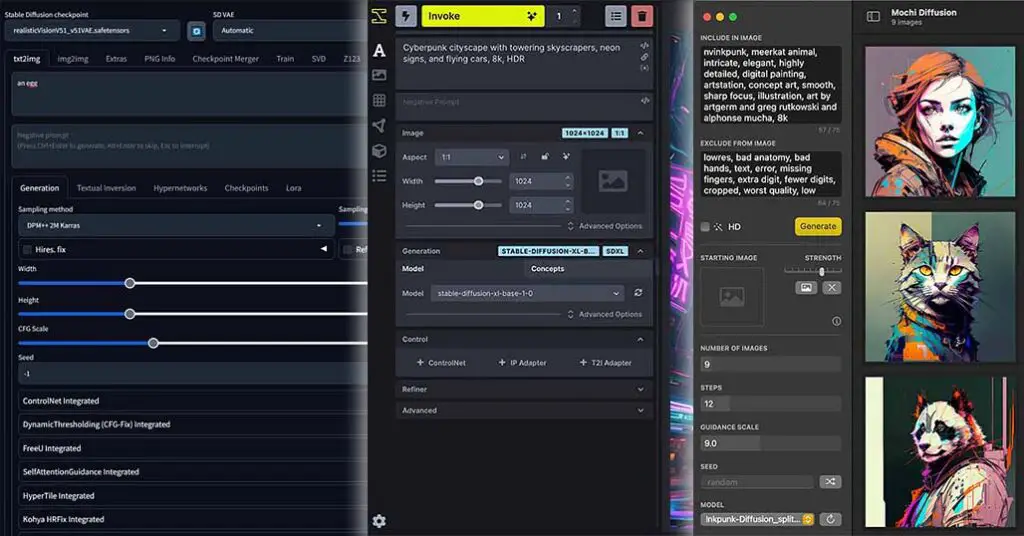

Having already compiled a benchmark-backed list of the absolute best GPUs you can get for locally hosted LLMs, I figured it’s time to put together a similar list for the most popular local AI image generation models, such as base Stable Diffusion, Stable Diffusion XL, and FLUX. Here are all of the most powerful (and some of the most affordable) GPUs you can get for running local AI image generation software without compromises. Let’s get to it!

Things That Matter – GPU Specs For SD, SDXL & FLUX

As you might already know, local large language models need a lot of VRAM and don’t depend that much on the GPU’s core clock speed. Diffusion-based image generation models like all flavors of Stable Diffusion, FLUX, and Qwen Image are a little different in that regard.

Spec-wise, diffusion-based models like Stable Diffusion, FLUX, and Qwen Image depend first on the card’s max clock speed and memory bandwidth for fast image generation, and second on its VRAM capacity for generating larger batches of images or images at higher resolutions.

According to the benchmarks comparing Stable Diffusion 1.5 image generation speed across many different GPUs, there is a huge jump in base SD performance between the top-end NVIDIA models such as the RTX 4090, 4080 and 3090 Ti and pretty much every other graphics card from the 2nd and 3rd generations, which fall very close to each other in terms of how many basic 512×512 or 768×768 images they can generate in one minute.

This is why this ranking focuses only on the best-performing cards and skips the RTX 2xxx series: while these are also reasonably powerful GPUs, they are not really worth considering as an upgrade this year. If you’re curious about AMD cards, which are not present in this list, we will get to that in a second.

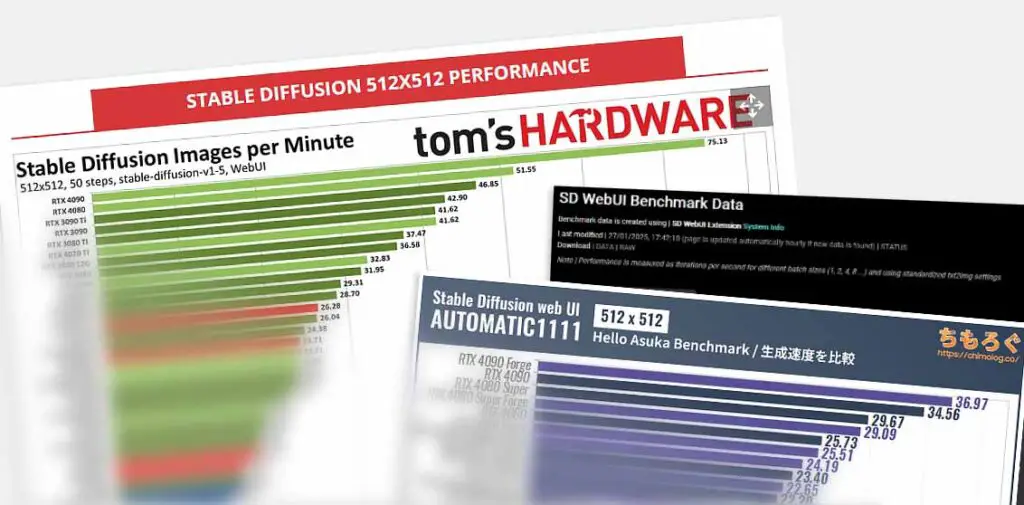

Reliable Stable Diffusion GPU Benchmarks – And Where To Find Them

An article comparing and recommending different GPUs is not worth much without clear references to real-world performance tests and benchmarks. When picking the best graphics card for running Stable Diffusion locally, the most important thing is to assess how fast your chosen card really is compared to its direct rivals in base SD and SDXL inference when generating images of different sizes. And that’s exactly what we’re doing here.

As it turns out, there aren’t many Stable Diffusion benchmarks featuring multiple GPUs out there, mainly because few people can afford to test a meaningful number of high-end cards in a relevant benchmarking setup without spending thousands of dollars on hardware.

Still, there are a few resources out there that can give you an idea of which cards are currently the best performance-wise. Here are the ones I found the most useful and relevant:

- The Chimolog Stable Diffusion GPU benchmark – an excellent resource comparing about 40 different NVIDIA GPUs in a few high-quality image generation benchmark tests, with some AMD cards also present in the lineup. The author has tested the cards both in the base SD Automatic1111 WebUI and in its upgraded fork, SD WebUI Forge. Note: this is a Japanese website with no English translation available. You can use tools like Google Translate to read it if you don’t know Japanese, or rely solely on the supplied comparison charts.

- Tom’s Hardware SD GPU comparison – outdated when it comes to AMD graphics cards (all AMD GPUs were tested using DirectML instead of ROCm, even on models where ROCm is now available), but still a good point of reference for NVIDIA cards.

- Vladmandic SD WebUI Benchmark Datasheet – a large database powered by the System Info tab extension for the SD Automatic1111 WebUI, gathering data from extension users who consent to share it. It shows the GPU model and general specs of the system used for generation, along with the generation speed in iterations per second – it/s (higher is better). One of the most up-to-date resources of this kind.
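Since it/s counts denoising steps rather than finished images, you can turn these numbers into a rough time per image by dividing the number of sampling steps by the it/s figure. Here’s a minimal Python sketch of that conversion, assuming a batch size of 1 and ignoring model loading, text encoding and VAE decoding (so real-world times will be somewhat longer). The step count and speeds below are purely illustrative, not benchmark results:

```python
def seconds_per_image(steps: int, its: float) -> float:
    """Rough sampling time for one image at batch size 1.

    Assumes `its` is the sampler speed in iterations (denoising steps)
    per second, and ignores loading, text-encoding and VAE-decode overhead.
    """
    if steps <= 0 or its <= 0:
        raise ValueError("steps and it/s must be positive")
    return steps / its


# Illustrative values only: a 20-step image at a few example speeds.
for its in (5.0, 10.0, 20.0):
    print(f"{its:>5.1f} it/s -> {seconds_per_image(20, its):.2f} s per image")
```

So under these assumptions, a card running at 20 it/s finishes a 20-step image in about a second of pure sampling time, while one at 5 it/s needs about four seconds.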

As you can see, while there aren’t many comparative resources of this kind, these should be just enough to give you an idea of how things look from the practical standpoint. These exact benchmarks were taken into account when compiling the list below.

Remember that benchmark results for base Stable Diffusion models will generally also be representative of SDXL, FLUX, and Qwen Image performance. Still, note that these newer diffusion models benefit from extra VRAM far more than base SD 1.5 does, especially in more advanced workflows such as checkpoint and LoRA model training. In the next section, you’ll learn exactly why.

What About VRAM? – How Much Do You Need For Stable Diffusion?

- Core Speed and CUDA Cores: The faster the GPU, the better the image generation speeds. Within the same NVIDIA generation, a higher CUDA core count generally translates into better Stable Diffusion inference and model training performance.

- VRAM: A minimum of 6-8GB for basic image generation without much tweaking of the initial settings; more for advanced workflows like running refiners, switching models efficiently, generating larger image batches, or training LoRA models.

For local AI image generation, you generally want the fastest GPU you can get. Yes, that’s it. Of course, with NVIDIA graphics cards the speed differences between adjacent models can often be quite small, while the price differences between them are much less consistent.

This is why, besides the top players in the game, I’ve included cards you can get a nice deal on when buying used, as well as cards that, while not the best of the best, are just enough for fast and effortless local image generation without breaking the bank. The list is ordered from the best and fastest options at the beginning to the budget options at the very end.

Video memory (VRAM for short) matters too, but more than 8GB of VRAM will generally not make image generation with Stable Diffusion and Stable Diffusion XL based models any faster, unless you often switch between different models or generate a lot of images at once using larger batch sizes.

Where large amounts of VRAM do matter a lot is efficiently switching between large SDXL models, using SDXL models alongside refiners without swapping models halfway through the image generation process, running the newest FLUX models, training Stable Diffusion checkpoints and LoRA models, and running local video generation models.
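To get a feel for why the newer models are so much hungrier for VRAM, a quick back-of-the-envelope estimate helps: the weights alone take roughly the parameter count multiplied by the bytes stored per parameter. The sketch below assumes approximate, publicly stated parameter counts for each model’s main denoising network (about 0.86B for the SD 1.5 UNet, 2.6B for the SDXL UNet, 12B for FLUX.1 and 20B for Qwen Image). These figures are not from the benchmarks above, and text encoders, the VAE and activations all come on top of them:

```python
# Approximate parameter counts of each model's main denoising network.
# These are assumed, rounded public figures, not measurements from this article.
PARAMS = {
    "SD 1.5 UNet": 0.86e9,
    "SDXL UNet": 2.6e9,
    "FLUX.1 transformer": 12e9,
    "Qwen Image transformer": 20e9,
}

# Bytes needed to store one parameter in each weight format.
BYTES_PER_PARAM = {"fp16": 2, "fp8": 1}


def weights_gib(params: float, fmt: str) -> float:
    """Memory taken by the weights alone, in GiB (no encoders, VAE or activations)."""
    return params * BYTES_PER_PARAM[fmt] / 2**30


for name, params in PARAMS.items():
    sizes = ", ".join(f"{fmt}: {weights_gib(params, fmt):5.1f} GiB" for fmt in BYTES_PER_PARAM)
    print(f"{name:<23} {sizes}")
```

Under these assumptions, the SDXL UNet fits comfortably in 8GB even in fp16 (about 4.8 GiB), while the FLUX.1 transformer needs around 22 GiB in fp16 for its weights alone, which is why lower-precision checkpoints such as fp8 are popular on 12GB cards.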

Now let’s see what exactly you can and cannot do using graphics cards with different amounts of VRAM on board.

8GB, 12GB and 16GB+ Graphics Cards for Stable Diffusion

Suppose you want to go the cheapest possible route and stick with no more than 8GB of VRAM (which is not really recommended anymore). Here is what you can and can’t easily do:

- You can easily generate images using Stable Diffusion and Stable Diffusion XL models.

- You won’t be able to run FLUX models locally, and with Qwen Image you will be limited to heavily quantized versions of the base model with lower quality outputs.

- You will be locked out from most local video generation models that are hitting the open-source local generative AI scene lately.

- You won’t have the ability to locally train SDXL checkpoints.

- You won’t be able to efficiently train larger or more complex LoRA models with larger training batch sizes.

Personally, I’ve been using an 8GB VRAM card (the RTX 2070 Super) for most of my tests here on the site for quite a long time. When making my full Fooocus WebUI guide, I also ran the same tests on a laptop with an RTX 3050 6GB. As you can see below, the 2070 Super needed just under 30 seconds to generate one 1152×896 px image in the “Speed” mode, while the laptop took about 38 seconds.

| Fooocus mode (time per 1152×896 px image) | RTX 3050 6GB (Laptop) | RTX 2070 SUPER 8GB (Desktop PC) |
|---|---|---|
| Quality | 70.04s | 50.25s |
| Speed | 38.04s | 27.36s |
| Extreme Speed | 11.45s | 7.49s |
| Lightning | 9.51s | 6.14s |
| Hyper-SD | 10.16s | 6.80s |
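To put the table above into perspective, here’s a tiny Python sketch that computes how many times longer the laptop RTX 3050 took than the desktop RTX 2070 SUPER in each Fooocus mode, using only the times from the table:

```python
# Seconds per 1152x896 px image in Fooocus, copied from the table above:
# (RTX 3050 6GB laptop, RTX 2070 SUPER 8GB desktop)
TIMES = {
    "Quality": (70.04, 50.25),
    "Speed": (38.04, 27.36),
    "Extreme Speed": (11.45, 7.49),
    "Lightning": (9.51, 6.14),
    "Hyper-SD": (10.16, 6.80),
}


def slowdown(laptop_s: float, desktop_s: float) -> float:
    """How many times longer the laptop card takes than the desktop card."""
    return laptop_s / desktop_s


for mode, (laptop, desktop) in TIMES.items():
    print(f"{mode:<14} {slowdown(laptop, desktop):.2f}x")
```

Across all five modes, the laptop card needs roughly 1.4 to 1.55 times as long per image.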

You can learn much more about the Fooocus WebUI here: How To Run SDXL Models on 6GB VRAM – Fooocus WebUI Guide [Part 1]

With all of the listed cards with 12GB of VRAM and more, you will be able to:

- Generate images using the Stable Diffusion and Stable Diffusion XL (SDXL) models.

- Load both a base SDXL model and a compatible refiner into the GPU memory at the same time.

- Load more than one SD/SDXL model into the GPU memory for quick model switching.

- Generate images using FLUX models, as well as higher quality Qwen Image checkpoints.

- Efficiently train medium-sized LoRA models with larger training batch sizes.

All of the cards with 16GB of VRAM and more will be able to do everything mentioned above, and will also give you more headroom for fine-tuning checkpoints and LoRA models faster and more efficiently.

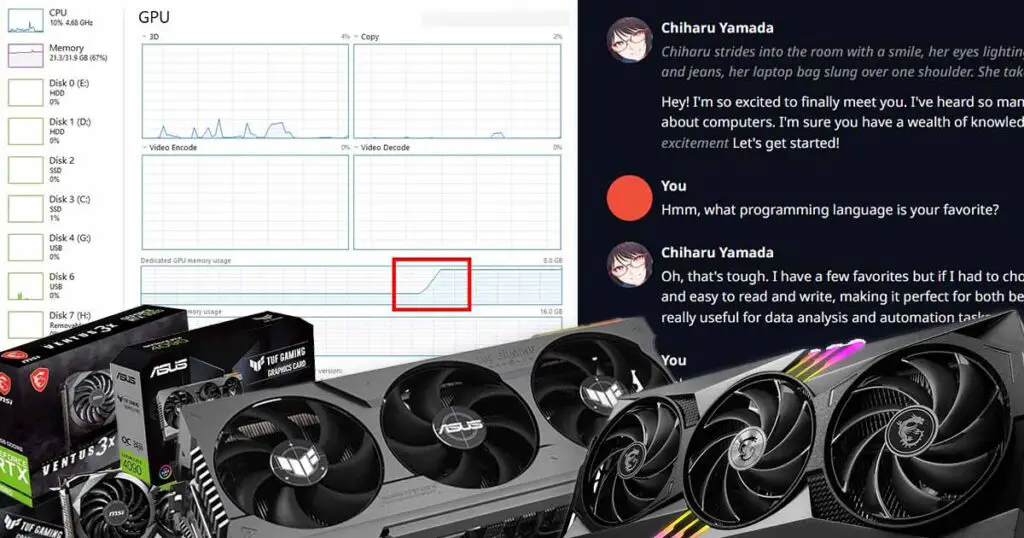

I feel it’s also important to mention that if you’re interested in other uses of local AI software, you will greatly benefit from a large amount of video memory on your GPU. For instance, running large language models locally is arguably one of the most useful and interesting ways to play around with AI privately on your system, and as you can learn in my list of the best GPUs for local LLMs, it pretty much requires as much VRAM as you can throw at it for the best results.

What About AMD Graphics Cards?

AMD has made significant progress in AI software support this year, and technologies such as ROCm, ZLUDA, DirectML and many others are still actively developed to bring AMD hardware closer to NVIDIA’s CUDA ecosystem in terms of compatibility. Still, in AI image generation, and with diffusion models more specifically, AMD cards are more than a little behind.

According to benchmarks such as the ones from Tom’s Hardware and Chimolog mentioned above, most AMD GPUs still fall far behind NVIDIA’s graphics cards in image generation speed, with both base Stable Diffusion and SDXL models. With FLUX models, the situation is exactly the same. In comparison, when it comes to locally hosting large language models, the newest AMD graphics cards are in a somewhat better position.

When it comes to software support, if you’re a beginner and not used to tinkering, you might face quite a few issues when setting up many local AI image generation tools with an AMD graphics card. While this will probably change over time, for now this is how things are.

If you’re interested in which Stable Diffusion WebUIs actually do have support for AMD GPUs, you can check out my list of all of the most popular local SD software here: Top 11 Stable Diffusion WebUIs – Fooocus, Automatic1111, ComfyUI & More

The List – Cards Ordered By Performance

Here are my best picks for graphics cards for local AI image generation with base Stable Diffusion, SDXL, FLUX, and Qwen Image. The GPUs are ordered by their performance according to the benchmarks linked above, from the best and fastest ones with the most video memory to the less powerful but still capable budget options. Enjoy!

1. The NVIDIA RTX 5090 32GB & The Entire 5th Generation

The newest NVIDIA RTX 5090, and the whole 5th generation of NVIDIA GPUs with it, is currently the best-performing lineup your money can buy. Still, image generation benchmarks for these cards are only slowly rolling in, for now indicating a ~30% increase in image generation speed on the RTX 5090 compared to its predecessor, the RTX 4090. One UL Procyon benchmark run ended with a score of about 7 seconds per image generated using FLUX, compared to around 10 seconds on the 4090.
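Percentage comparisons like these can be read in two ways, which is worth keeping in mind when comparing benchmark claims: going from ~10 s to ~7 s per image means about 30% less time per image, which works out to roughly 43% more images per minute. Here’s a quick Python sketch of both calculations, using only the rounded Procyon figures quoted above:

```python
def time_saved(old_s: float, new_s: float) -> float:
    """Fraction of per-image time saved by the new card."""
    return 1 - new_s / old_s


def throughput_gain(old_s: float, new_s: float) -> float:
    """Fractional increase in images generated per unit of time."""
    return old_s / new_s - 1


# Rounded UL Procyon FLUX figures quoted above: ~10 s (RTX 4090) vs ~7 s (RTX 5090).
print(f"time saved:      {time_saved(10, 7):.0%}")
print(f"throughput gain: {throughput_gain(10, 7):.0%}")
```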

The jump in overall performance of the 5xxx cards over the 4th gen is noticeable, and when discussing the best GPUs strictly for AI image generation, I feel it’s necessary to mention these cards because of their much higher memory bandwidth and significantly higher CUDA core counts across the whole lineup.

If you have the money to spare and you’re not upgrading directly from a 4xxx card, there really is no reason not to go for the RTX 5090 here. If you’re on a tighter budget, the NVIDIA RTX 5080, 5070 Ti, and the base 5070 bring the same 5th-generation upgrades at lower price points, with less VRAM. That’s pretty much it.

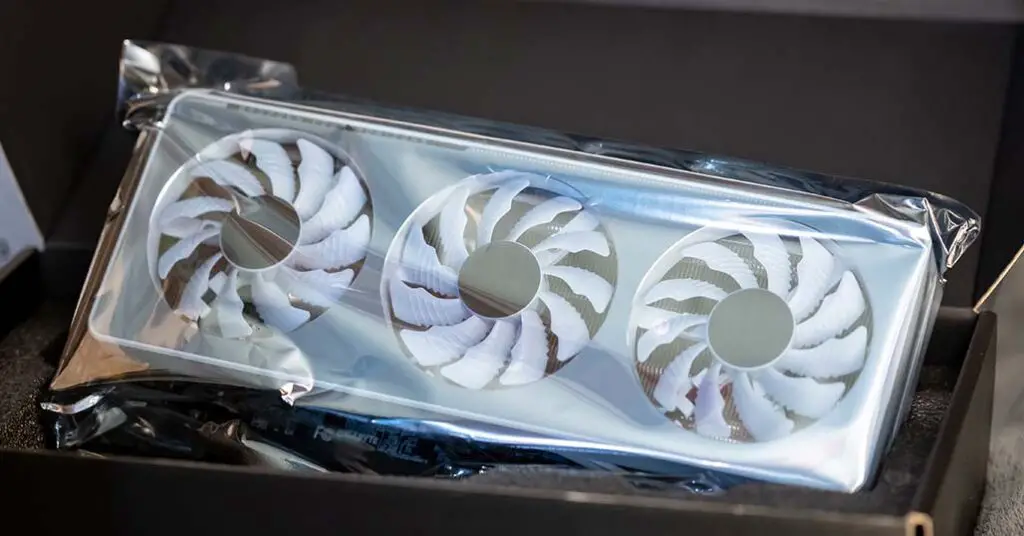

2. NVIDIA GeForce RTX 4090 24GB

The NVIDIA GeForce RTX 4090, while quite obviously pretty expensive, is currently the best choice if we’re looking at inference speed compared to the rest of the NVIDIA RTX and AMD GPU lineup. Aside from the newest 5th generation GPUs that hit the market at the end of January, this is one of the most powerful cards you can get.

Compared to the next most popular choice listed further down, the RTX 3090 Ti 24GB, it’s roughly twice as fast when generating both 512×512 and 512×768 images. With the right configuration and settings, the RTX 4090 can reach around 20-30 it/s, and more with further optimizations. Really worth looking at if you need the absolute best hardware.

3. NVIDIA GeForce RTX 4080 16GB (+RTX 4080 Super)

The NVIDIA GeForce RTX 4080 comes second after the 4090 performance-wise. Compared to its older brother, it only has 16GB of VRAM on board.

Approximately 25% slower than the RTX 4090 for both SD and SDXL image generation, it’s the second most powerful card in the 4th generation of NVIDIA RTX GPUs. In the previously mentioned Tom’s Hardware benchmark, it can “only” generate 20 768×768 px images with 50 steps in one minute, while the 4090 maxes out at almost 30.

The NVIDIA RTX 4080 Super is generally very close to the base 4080 in performance benchmark scores, and its small performance jump isn’t very significant. Technically speaking, it only has about 5% more CUDA cores (10240 vs. 9728) and a max boost clock just 0.04 GHz higher (!). As the 4080 Super also features 16GB of VRAM, in my honest opinion, picking it over the base 4080 is simply not worth it.

4. NVIDIA GeForce RTX 4070 Ti Super 16GB

The NVIDIA GeForce RTX 4070 Ti Super is pretty much a direct upgrade to the base RTX 4070 Ti performance with more VRAM and a wider memory bus.

The 4070 Ti Super has 16GB of GDDR6X video memory on a 256-bit memory bus (compared to the 192-bit bus on all of the other 4070 models), and it features slightly more CUDA cores than its predecessors from the 4070 line. All this with the same power requirements and the same clock speed.

While both the base RTX 4070 Ti and the Ti Super are reliable and fast GPUs, the Super is still considered better because of its extra VRAM and higher memory bandwidth. The performance boost won’t be that noticeable in gaming, but in memory-intensive applications it certainly will be.
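The bandwidth advantage follows directly from the wider bus: theoretical peak memory bandwidth is the bus width in bytes multiplied by the per-pin data rate. The Python sketch below assumes the 21 Gbps GDDR6X data rate that NVIDIA lists for both the 4070 Ti and the 4070 Ti Super; that figure is not from the benchmarks above:

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus_width_bits: memory interface width (e.g. 192 or 256 bits).
    data_rate_gbps: effective per-pin data rate in Gbit/s (assumed 21 here).
    """
    return bus_width_bits / 8 * data_rate_gbps


ti = peak_bandwidth_gbs(192, 21.0)        # RTX 4070 Ti, 192-bit bus
ti_super = peak_bandwidth_gbs(256, 21.0)  # RTX 4070 Ti Super, 256-bit bus
print(f"4070 Ti: {ti:.0f} GB/s, 4070 Ti Super: {ti_super:.0f} GB/s, ratio {ti_super / ti:.2f}")
```

With the same memory speed on both cards, the wider bus alone gives the Ti Super about a third more peak bandwidth (roughly 672 vs. 504 GB/s).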

In the Ayaka benchmark from the Chimolog tests, generating a 512×768 px image with the base Stable Diffusion model in the Automatic1111 WebUI took about 15.6 seconds [16.4 it/s] on the RTX 4070 Ti Super. Compared to the base 4070 Ti (16.4 seconds [15.44 it/s]), the 4070 Super (18.3 seconds [13.40 it/s]), and the 4070 (21.1 seconds [11.70 it/s]), you can see that the difference between the base 4070 and our go-to choice is quite substantial.
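If you want to quantify that gap, here’s a short Python sketch that sorts the four Ayaka benchmark times quoted above and shows how much longer each card takes than the fastest one:

```python
# Ayaka benchmark times in seconds (512x768 px, base SD, Automatic1111), as quoted above.
AYAKA_SECONDS = {
    "RTX 4070 Ti Super": 15.6,
    "RTX 4070 Ti": 16.4,
    "RTX 4070 Super": 18.3,
    "RTX 4070": 21.1,
}


def relative_to_fastest(times: dict[str, float]) -> dict[str, float]:
    """Map each card, fastest first, to how much longer it takes (0.35 = 35%)."""
    fastest = min(times.values())
    ordered = sorted(times.items(), key=lambda kv: kv[1])
    return {card: secs / fastest - 1 for card, secs in ordered}


for card, extra in relative_to_fastest(AYAKA_SECONDS).items():
    print(f"{card:<18} {AYAKA_SECONDS[card]:5.1f} s  (+{extra:.0%})")
```

By these numbers, the base 4070 takes about 35% longer per image than the 4070 Ti Super, while the 4070 Ti is only about 5% behind.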

5. NVIDIA GeForce RTX 4070 Ti 12GB (and the RTX 4070 Super)

The NVIDIA GeForce RTX 4070 Ti is the second most powerful card from the 4070 series. While it only has 12GB of VRAM compared to the 16GB on the 4070 Ti Super, it does offer pretty much the same performance as the 4070 Ti Super in the aforementioned Stable Diffusion benchmark tests.

The extra video memory and bandwidth of the Ti Super are a neat addition for training SD checkpoints and LoRA models locally, but they’re not at all necessary if you just want to generate images using SD, SDXL or FLUX. This makes the 4070 Ti a great pick, especially if you catch a good deal.

To sum things up, the AI performance of the 4070 lineup should rank like this, from the best to the worst performing card: 4070 Ti Super > 4070 Ti > 4070 Super > 4070. Keep in mind that all of them are very capable GPUs, and the differences between them in AI image generation are relatively small, as we’ve already established.

Let’s move on to one of the most popular picks of the last year, both for locally hosted LLMs and local AI software in general.

Interested in the best AMD graphics cards instead? Check out this list here: 6 Best AMD Cards For Local AI & LLMs This Year

6. NVIDIA GeForce RTX 3090 Ti 24GB – Still Going Strong

The NVIDIA GeForce RTX 3090 Ti is a card that still surprises me to this day with its popularity among local AI software enthusiasts. It’s also the one I swear by, simply because of its price-to-performance ratio. It comes right after the 4070 Ti and the 4080 in terms of raw benchmark performance.

In the Tom’s Hardware benchmarks, the RTX 3090 Ti was able to generate 15.89 768×768 px images in one minute using the base Stable Diffusion 1.5 model. The RTX 4080 finished the same test with only about four more images (20).
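Since Tom’s Hardware reports throughput in images per minute, it can be handy to convert these numbers into seconds per image. A minimal Python sketch using only the two figures quoted above:

```python
def seconds_per_image_from_rate(images_per_minute: float) -> float:
    """Convert a throughput figure (images per minute) into seconds per image."""
    return 60.0 / images_per_minute


# Tom's Hardware SD 1.5 figures quoted above (768x768 px images per minute).
RATES = {"RTX 3090 Ti": 15.89, "RTX 4080": 20.0}

for card, rate in RATES.items():
    print(f"{card}: {seconds_per_image_from_rate(rate):.2f} s per image")

gain = RATES["RTX 4080"] / RATES["RTX 3090 Ti"] - 1
print(f"RTX 4080 throughput advantage: {gain:.0%}")
```

That puts the 4080 at roughly 3 seconds per image versus about 3.8 seconds for the 3090 Ti, or around 26% more images per minute.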

In the previously mentioned Ayaka benchmark on the Chimolog website, the base 3090 finished generating the test 512×768 px image in 16.5 seconds [12.76 it/s], which positioned it right after the RTX 4070 Super (18.3 seconds [13.40 it/s]).

The performance of the 3090 and the 3090 Ti in AI image generation alone is pretty much the same, and since the base 3090 is less pricey while offering the same 24GB of VRAM, it’s the better choice of the two.

All this is a great deal, considering that the price of this card has already fallen quite substantially and that it can still be found used for even better prices, for instance on eBay. If you plan to upgrade from any 2xxx gen card, this is still one of the best choices.

Want to know more about this one in relation to the most popular local AI applications? I’ve got you covered: NVIDIA GeForce 3090/Ti For AI Software – Is It Still Worth It?

7. NVIDIA GeForce RTX 3080 Ti 12GB

The NVIDIA GeForce RTX 3080 Ti comes directly after the 3090 and the 3090 Ti. Less VRAM and a little less horsepower, but it’s still a card worth looking at if you want a good GPU for local AI image generation without breaking the bank.

Being about 15% slower than the 3090 Ti isn’t much of an issue in our use case. If you can live without the full 24GB of VRAM, you can easily pick it over the 3090s.

Keep in mind that, as we’ve said before, more than 12GB of VRAM won’t be of much use to you for now unless you plan to get into more advanced image generation techniques and model training.

Nothing more to say here really: the 3080 Ti is a great card, and its prices are much more budget-friendly than the previous propositions from this list. With that said, let’s move on to our budget choice, the least expensive card in our ranking!

8. NVIDIA GeForce RTX 3060 12GB – My Budget Pick

You can easily get this one for under $300. The NVIDIA GeForce RTX 3060 is a budget choice among the relatively recent GPUs suitable for pretty much all local AI applications, including fast local image generation using SD, SDXL and FLUX.

While it’s by no means a speed demon, and it does fall behind all of the cards in this list, with 12GB of VRAM and decent performance in Automatic1111, it’s better than virtually every card from the NVIDIA 2xxx series.

Whether you’re thinking of a small upgrade for a much older system, or you just want a GPU that will let you easily get into local AI image generation, this one is a perfectly good pick. And of course, you can probably get it for even less by settling for a second-hand unit in good condition.

The Conclusion – And My Private Favorites

Phew, that was quite a ride. The RTX 4090 24GB is the most expensive but still reasonable pick for more advanced Stable Diffusion, SDXL, FLUX, and Qwen Image workflows and model training; the RTX 3090 Ti 24GB is still one of the most popular choices thanks to its outstanding price-to-performance ratio; and the RTX 3060 12GB is among the best budget entry points to local AI. With that, I feel like we’ve made the most of this list.

If you ask me, I’m all about the highest possible performance without breaking the bank. That’s why my personal choice remains a second-hand RTX 3090 Ti in good working condition, both as a first GPU for Stable Diffusion and other local AI applications and as an upgrade from the 2xxx NVIDIA card lineup.

Either way, I hope this ranking was helpful to you, and thank you for reading. With all this, you should be able to settle on a GPU you won’t regret. Until next time!