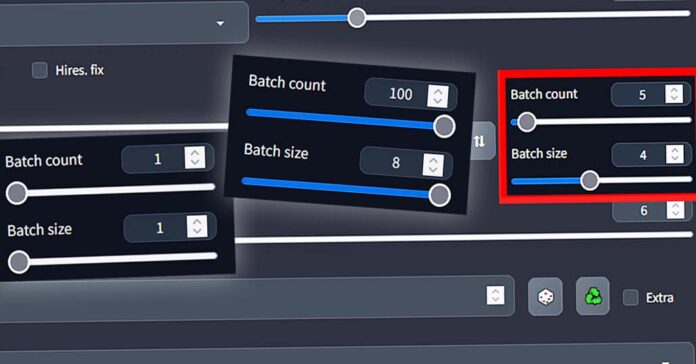

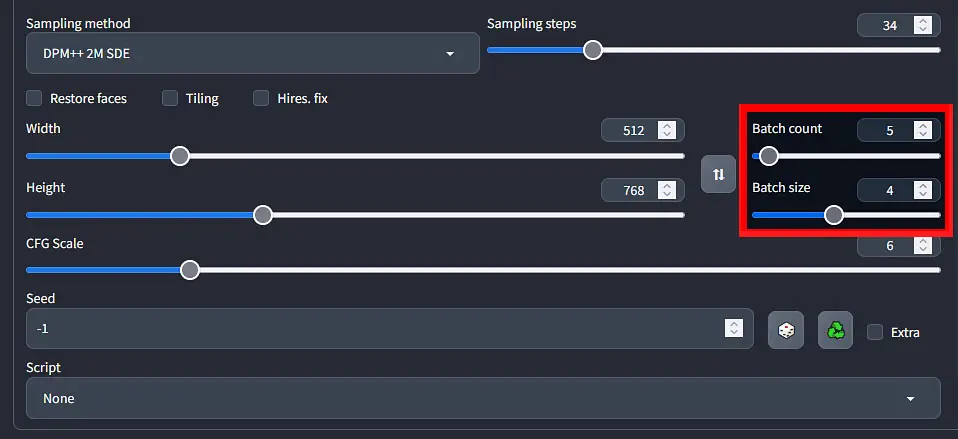

Whether you generate images with Stable Diffusion through a WebUI, Google Colab, or the command line, batch count and batch size are among the most important settings to know about! Let’s first quickly explain what these parameters do, and then figure out together what values you should set for maximum GPU efficiency and blazing fast image generation!

What Is Batch Size In Stable Diffusion Terminology?

What are batch count and batch size? The best way to explain them is to start with the batch size.

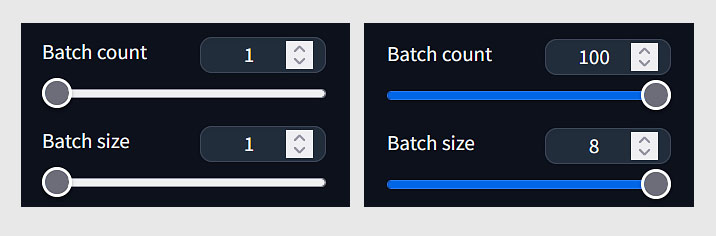

Batch size is the number of images generated in one go. Images in the same batch are processed in parallel. Because every image in the batch has to be held in memory and processed at the same time, the larger your batch size is, the more VRAM you will need.

VRAM is your graphics card’s (GPU’s) own fast-access memory, separate from your main system RAM. Many modern GPUs have between 8 and 24 GB of VRAM, although many older graphics card models have less than 4 GB of VRAM.

Having less than 8 GB of VRAM can sometimes pose a problem, especially when dealing with larger AI models that have to be loaded into memory (such as most local LLMs for generating GPT-style text outputs), or with more complex model training processes.

When it comes to using Stable Diffusion, 8 GB of VRAM is plenty, unless you plan to generate larger batches of images. We’ll go into all the benefits of setting the batch size to higher values in a short while.

So, the larger you set your batch size, the more images will be generated in parallel and the more VRAM the image generation process will consume.

What happens if you set your batch size too high? At some point during the image generation process your graphics card will run out of memory, you will be faced with an out-of-memory error such as “CUDA out of memory” on NVIDIA graphics cards, and the image generation process will stop and fail.
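One common way to deal with this failure is to retry with a smaller batch. The sketch below is only an illustration: `generate_batch` and `fake_generate_batch` are hypothetical stand-ins for whatever call produces a batch in your setup, and the out-of-memory failure is modelled with Python’s built-in `MemoryError` (a real PyTorch setup would raise its own CUDA out-of-memory exception instead).

```python
from typing import Callable, List


def generate_with_fallback(
    generate_batch: Callable[[int], List[str]],
    batch_size: int,
) -> List[str]:
    """Try a batch; on an out-of-memory error, halve the batch size and retry.

    `generate_batch` is a hypothetical stand-in for the call that produces
    one batch of images. Out-of-memory is modelled as MemoryError here.
    """
    while batch_size >= 1:
        try:
            return generate_batch(batch_size)
        except MemoryError:
            print(f"Batch size {batch_size} ran out of memory, halving")
            batch_size //= 2
    raise MemoryError("even a batch size of 1 does not fit in memory")


def fake_generate_batch(batch_size: int) -> List[str]:
    """Simulated generator: pretends anything above 4 images does not fit."""
    if batch_size > 4:
        raise MemoryError("simulated out-of-memory error")
    return [f"image_{i}.png" for i in range(batch_size)]


# Starts at 16, fails at 16 and 8, then succeeds with a batch of 4.
images = generate_with_fallback(fake_generate_batch, batch_size=16)
print(len(images))  # prints 4
```

Halving is just one possible policy; you could also step down by one image at a time if you want to land closer to the largest batch that fits.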

In general, setting the batch size to higher values will make each batch take a bit longer to finish, as more image data needs to be processed at once.

Let’s now move on to the equally important batch count setting.

What About Batch Count Then?

Batch count is a setting that declares the number of batches of images you want to generate. The total number of images generated is your batch size multiplied by your batch count.

For example, if you set your batch size to 4 images and your batch count to 2, after the generation process finishes without any errors, you will get 8 images in the end.
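The same arithmetic as a tiny Python sketch. The function name `total_images` is made up for illustration and is not part of any Stable Diffusion tool.

```python
def total_images(batch_size: int, batch_count: int) -> int:
    """Total images produced: images per batch times number of batches."""
    if batch_size < 1 or batch_count < 1:
        raise ValueError("batch_size and batch_count must both be at least 1")
    return batch_size * batch_count


print(total_images(batch_size=4, batch_count=2))  # prints 8
```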

As we’ve mentioned before, images in one batch are processed in parallel, while the individual batches are processed one after another. This means that if you set your batch size to 4 and your batch count to 2, the first 4 images will be generated in parallel, and only then will the generation of the next 4 images start.

Now you might naturally ask whether generating say 4 images in one batch is faster, or maybe generating 4 batches consisting of 1 image would be more efficient.

The short answer is that you should set your batch size first, and raise the batch count only when a larger batch size would make you run out of VRAM and you still need more images from a single run. This way you can ensure the best time efficiency of the image generation process. Let’s get into more important details!

How To Best Set These Values? – GPU & Time Efficiency

So let’s recap. Images generated in one batch are processed in parallel, and individual image batches are processed one after another.

- Setting Batch Size to higher values will use up more VRAM, but will be a little more efficient in terms of overall generation time when you generate larger numbers of images at one time.

- Setting Batch Count to higher values and Batch Size to lower values will free up more of your VRAM during image generation, but will also limit the overall performance when you generate larger numbers of images at one time.

As you will see in a short while, when generating larger images, you might have to balance the batch size and batch count settings to avoid getting out-of-memory errors during image generation.

Does Either Batch Size or Batch Count Affect The Image Generation Speed?

If you compare generating n images in one single batch with generating n batches of one image each, the first option generally comes out ahead on time, albeit not by much when generating only a few (around 1-5) images at one time. The more images you attempt to generate in one go, the more noticeable the difference becomes.

Therefore, the best strategy for overall GPU efficiency is to raise the batch size first, and only once you hit the limit of your available VRAM, use the batch count setting as a multiplier to generate more images in one go.
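This strategy can be sketched as a small helper. It assumes you already know the largest batch size that fits in your VRAM (called `max_batch_size` here, a value you would have to find by experiment); the function name `plan_batches` is hypothetical.

```python
import math
from typing import Tuple


def plan_batches(total_images: int, max_batch_size: int) -> Tuple[int, int]:
    """Return (batch_size, batch_count) covering at least total_images.

    Batch size is made as large as allowed first; batch count then acts
    as a multiplier. max_batch_size is assumed to be known in advance.
    """
    if total_images < 1 or max_batch_size < 1:
        raise ValueError("total_images and max_batch_size must be at least 1")
    batch_size = min(total_images, max_batch_size)
    batch_count = math.ceil(total_images / batch_size)
    return batch_size, batch_count


print(plan_batches(8, 4))   # (4, 2)
print(plan_batches(10, 4))  # (4, 3): 12 images, 2 more than requested
print(plan_batches(3, 8))   # (3, 1)
```

Because every batch has the same size, a total that is not a multiple of the batch size gets rounded up, as in the second call.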

Batch size and batch count settings should not affect the generated image quality at all. Within one batch, each subsequent image should be generated using a seed number one higher than the previous one.
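A rough sketch of that seed numbering is shown below. The function `seeds_for_run` is hypothetical, and the idea that numbering simply continues from one batch into the next is an assumption made for illustration; your frontend may handle seeds across batches differently.

```python
from typing import List


def seeds_for_run(start_seed: int, batch_size: int, batch_count: int) -> List[List[int]]:
    """Group seeds by batch, incrementing by one for every image.

    Assumption: the numbering continues across batches rather than
    restarting at start_seed for each batch.
    """
    return [
        [start_seed + batch * batch_size + i for i in range(batch_size)]
        for batch in range(batch_count)
    ]


for index, seeds in enumerate(seeds_for_run(1000, batch_size=4, batch_count=2), start=1):
    print(f"batch {index}: {seeds}")
# batch 1: [1000, 1001, 1002, 1003]
# batch 2: [1004, 1005, 1006, 1007]
```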

One important thing to keep in mind here is that if you’re attempting to generate larger images (typically over 768×768 px), you might need to resort to smaller batch sizes and larger batch count values if you don’t have a lot of VRAM to spare.

When it comes to computation in general, processing data in parallel is in most cases the more efficient way to go whenever you have the option.

Batch Count vs. Batch Size – What Is The Difference?

So, in the end, we learned that the batch size is the value that dictates the number of images generated in parallel, and the batch count setting tells Stable Diffusion how many such batches of images to generate back to back.

If you want to generate a large number of images in one go and you’re short on VRAM, you will have to lower your batch size value and raise your batch count value, as the more images there are in one batch, the more VRAM Stable Diffusion will require from your GPU during the generation process.

Generating one batch of 10 images will generally be at least a little bit faster than generating 10 batches of one image each because of how parallel processing is implemented in Stable Diffusion, provided you have the VRAM to spare.

Check out our Stable Diffusion setup guide for low-VRAM systems if you’re struggling with out of memory errors when generating your pictures:

Stay tuned!