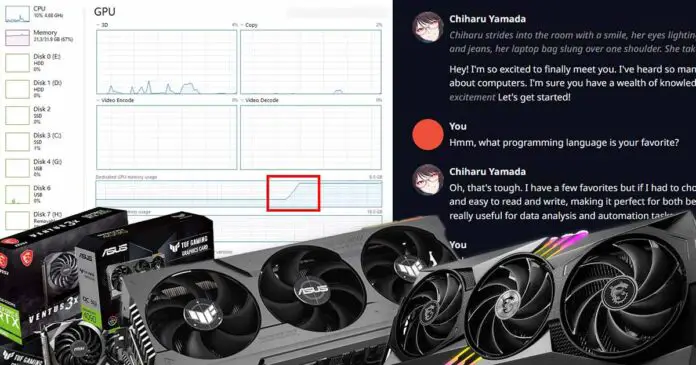

Oobabooga WebUI, koboldcpp, and pretty much any other software made for easily accessible local LLM text generation and private chats with AI models share the same best-case scenarios when it comes to the top consumer GPUs you can use to maximize performance. Here is my benchmark-backed list of the 8 graphics cards I found to be the best for working with various open-source large language models locally on your PC. Read on!

Need some more guidance? It’s best to start here – Beginner’s Guide To Local LLMs – How To Get Started (Hardware & Software)

What Are The GPU Requirements For Local AI Text Generation?

Contrary to popular belief, for basic AI text generation with a small context window, you don’t really need to have the absolute latest hardware – check out my tutorial here!

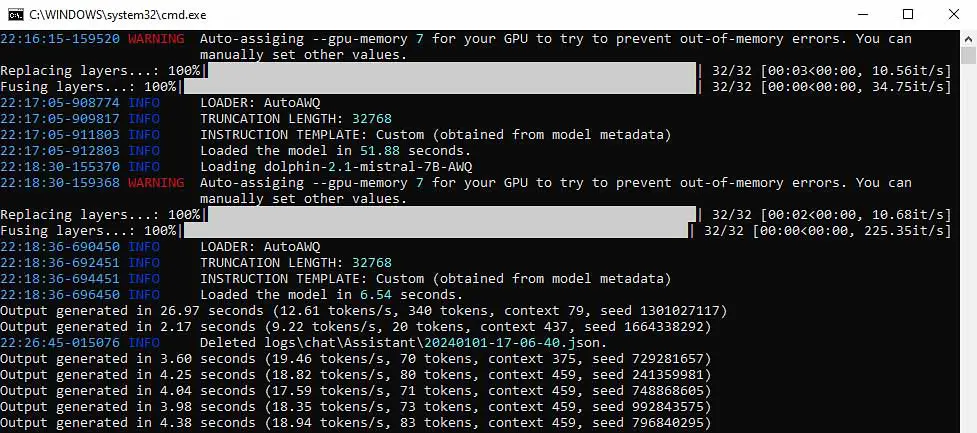

Running open-source large language models locally is not only possible but extremely simple. If you’ve come across my guides on the topic, you already know that you can run them on GPUs with less than 8GB of VRAM, or even without having a GPU in your system at all! But running the models isn’t quite enough. In an ideal world, you want to get responses as fast as possible. For that, you need a GPU that is up to the task.

So, what should you be looking for in a graphics card that is going to be used for AI text generation with LLMs? One of the most important things is a large amount of VRAM.

VRAM is the memory located directly on your GPU that is used when your graphics card processes data. When you run out of VRAM, the GPU has to “outsource” the data that doesn’t fit in its own memory to the main system RAM. This is when trouble begins.

Your main system RAM is also very fast, but the time it takes to send data from the GPU to system RAM and back is what causes extreme slowdowns once the VRAM on your graphics card runs out.
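To get a feel for why spilling over into system RAM hurts so much, here is a rough back-of-the-envelope sketch. The two bandwidth figures are approximate, illustrative assumptions (roughly 1000 GB/s for the on-card memory of a high-end GPU and roughly 32 GB/s for a PCIe 4.0 x16 link), not measurements of mine, and the amount of spilled data is made up. Treat the result as an order-of-magnitude comparison only.

```python
# Rough comparison: reading data from VRAM vs. pulling it over the PCIe bus.
# Both bandwidth values are approximate, illustrative assumptions.
VRAM_BANDWIDTH_GBPS = 1000.0  # assumed on-card memory bandwidth (high-end GPU)
PCIE_BANDWIDTH_GBPS = 32.0    # assumed PCIe 4.0 x16 theoretical bandwidth


def transfer_time_ms(size_gb: float, bandwidth_gbps: float) -> float:
    """Time in milliseconds to move `size_gb` gigabytes at `bandwidth_gbps` GB/s."""
    return size_gb / bandwidth_gbps * 1000.0


spilled_gb = 2.0  # hypothetical amount of model data that did not fit in VRAM
in_vram = transfer_time_ms(spilled_gb, VRAM_BANDWIDTH_GBPS)
over_pcie = transfer_time_ms(spilled_gb, PCIE_BANDWIDTH_GBPS)

print(f"Read from VRAM: {in_vram:.1f} ms")
print(f"Sent over PCIe: {over_pcie:.1f} ms")
print(f"Slowdown:       {over_pcie / in_vram:.0f}x")
```

Since this data has to be touched again for every generated token, even a small spill adds up quickly.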

Running out of VRAM is not only a problem you might encounter when using LLMs but also when generating images with Stable Diffusion, doing AI vocal covers for popular songs (see my guide for that here), and many other activities involving locally hosted artificial intelligence models.

Many other variables count here as well. The number of tensor cores, the amount and speed of cache memory, and the memory bandwidth of your GPU are also crucial. However, you can rest assured that all of the GPUs listed below meet the conditions that make them top-notch choices for use with various AI models. If you want to learn even more about the technicalities involved, check out this neat explainer article here!

How Much VRAM Do You Really Need?

The straightforward answer is: as much as you can get. The reality, however, is that when it comes to consumer-grade graphics cards, there are very few options with more than 24GB of VRAM. The NVIDIA RTX 5090, with its 32GB of VRAM, is a notable exception. Another great high-end choice is the 24GB NVIDIA RTX 4090, which I’ll cover in a short while.

The only other viable way to get more usable VRAM is to connect multiple GPUs to your system, which requires both technical skills and the right base hardware. In general, though, 24GB of VRAM on a single GPU can handle most of the larger models you throw at it and is more than enough for most applications!
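If you want a quick sanity check before downloading a model, the size of the weights can be estimated from the parameter count and the number of bits used per weight. The sketch below is a simplification under stated assumptions: it counts the weights only, while real quantization formats usually take slightly more than their nominal bit width, and the context (KV cache) and runtime overhead come on top. The function name and the 24GB comparison are just for illustration.

```python
def weights_size_gb(params_billions: float, bits_per_weight: float) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billions * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Weights-only estimate for a few common model sizes and precisions.
# "fits" ignores context and runtime overhead, so leave some headroom.
for params in (7, 13, 70):
    for bits in (16, 8, 4):
        size = weights_size_gb(params, bits)
        verdict = "fits" if size <= 24 else "does not fit"
        print(f"{params}B @ {bits}-bit: ~{size:.1f} GB of weights ({verdict} in 24GB)")
```

By this estimate, a 13B model at 16-bit precision is already over 24GB on weights alone, while the same model at 4-bit needs only a fraction of that.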

Check out my longer, and even more accurate answer to this question here: LLMs & Their Size In VRAM Explained – Quantizations, Context, KV-Cache

Is 8GB Of VRAM Enough For Playing Around With LLMs?

Yes, you can run some smaller LLMs even on a system with 8GB of VRAM. As a matter of fact, I did exactly that in this guide on running LLMs for local AI assistant roleplay chats, reaching speeds of up to around 20 tokens per second with a small context window on my trusty old NVIDIA RTX 2070 SUPER (a short 2-3 sentence message generated in just a few seconds).
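To translate tokens per second into actual waiting time, you can divide the length of the reply by the generation speed. In the sketch below, the 20 t/s figure comes from my test above, while the message length of 60 tokens and the other two speeds are assumptions picked purely for illustration.

```python
def generation_time_s(num_tokens: int, tokens_per_second: float) -> float:
    """Seconds needed to generate `num_tokens` at a steady generation speed."""
    return num_tokens / tokens_per_second


message_tokens = 60  # hypothetical length of a short 2-3 sentence reply

# 20 t/s is the speed mentioned above; 5 and 40 t/s are illustrative values.
for speed in (5, 20, 40):
    seconds = generation_time_s(message_tokens, speed)
    print(f"{speed:>2} t/s -> {seconds:.1f} s for a {message_tokens}-token message")
```

This ignores the time spent processing the prompt before the first token appears, which grows with the size of the context.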

While you can certainly run some smaller and lower-quality LLMs on an 8GB graphics card, if you want higher output quality and reasonable generation speeds with larger context windows, you should really only consider cards having between 12GB and 32GB of VRAM. These are exactly the cards I’m about to list for you!
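Larger context windows cost VRAM because the model keeps a cache of keys and values (the KV cache) for every token in the context. A common way to estimate its size is shown below. The model dimensions used here (32 layers, 32 KV heads, a head size of 128, 16-bit cache values) are assumptions roughly typical of a 7B-class model without grouped-query attention, so plug in the numbers for your own model.

```python
def kv_cache_size_gib(
    n_layers: int,
    n_kv_heads: int,
    head_dim: int,
    context_len: int,
    bytes_per_value: int = 2,  # 2 bytes = 16-bit cache values (assumption)
) -> float:
    """Approximate KV cache size in GiB for a single sequence."""
    # Factor of 2: one tensor for keys and one for values in every layer.
    total_bytes = 2 * n_layers * n_kv_heads * head_dim * context_len * bytes_per_value
    return total_bytes / 1024**3


# Assumed dimensions of a hypothetical 7B-class model.
for context_len in (2048, 4096, 8192):
    size = kv_cache_size_gib(n_layers=32, n_kv_heads=32, head_dim=128, context_len=context_len)
    print(f"{context_len:>5} tokens of context -> ~{size:.1f} GiB of KV cache")
```

The cache grows linearly with the context length, which is why a model that runs fine with a small context window can run out of VRAM once you raise it.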

You might also like: 7 Best AMD Motherboards For Dual GPU LLM Builds – Full Guide

Should You Consider Cards From AMD?

Historically, NVIDIA has been the go-to recommendation for local AI and deep learning thanks to its mature CUDA ecosystem. However, the situation is changing rapidly. AMD’s ROCm (Radeon Open Compute Platform) has seen significant improvements, and many popular local LLM software options like LM Studio and Ollama now offer some degree of support for AMD GPUs.

While NVIDIA still holds an advantage in terms of out-of-the-box compatibility with the widest range of AI applications, AMD has become a strong contender, especially for those willing to do a little more initial setup. The primary advantage of AMD is often a better price-to-VRAM ratio, which is a critical factor for running larger language models.

Cards like the Radeon RX 7900 XTX with 24GB of VRAM offer a compelling alternative to NVIDIA’s offerings. For those comfortable with a bit of tinkering and looking for excellent value, AMD is a more viable option than ever before.

If you want to check out a list of AMD GPUs I recommend for local LLM software, you’re in luck! I’ve put one together for you here: 6 Best AMD Cards For Local AI & LLMs In Recent Months

Can You Run LLMs Locally On Just Your CPU?

Absolutely. You can run many powerful, open-source LLMs locally using just your computer’s CPU. This is made possible by compatible inference software such as KoboldCpp, which manages the process for you and loads the AI models directly into your system’s RAM. It’s an excellent way to generate text using AI without even having a GPU installed.

Be aware that performance on a CPU is generally much lower than on a dedicated graphics card, especially with the largest and most capable models. That said, it’s a fantastic and accessible entry point into the world of local AI.

Now let’s move on to the actual list of the graphics cards that have proven to be the absolute best when it comes to local AI LLM-based text generation. Here we go!

Best GPUs For Local LLMs In 2025 – My Top List

1. NVIDIA RTX 5090 32GB

As of now, the NVIDIA RTX 5090 is the most powerful consumer-grade GPU your money can get you. While it’s certainly not cheap, there are no better-performing options out there. Out of the entire 5th generation NVIDIA RTX series (the RTX 50 series), only the RTX 5090 comes with 32GB of GDDR7 VRAM.

This massive memory pool makes it the undisputed king for handling many of the larger local open-source LLMs on a single GPU. Other cards from the lineup, like the RTX 5080 and RTX 5070 Ti, offer 16GB of VRAM, while the base RTX 5070 has only 12GB of video memory on board. For those who want the absolute best, this is the card to go for (and, unfortunately, the priciest one).

2. NVIDIA RTX 4090 24GB

The NVIDIA RTX 4090 is the fastest consumer-grade GPU in the 4th generation lineup. If you want top-notch hardware for playing around with AI without moving to 5th generation cards, this is it. The RTX 4090 24GB is without question the second-best choice for local LLM inference and LoRA training.

It can offer amazing generation speeds, even up to around 30-50 t/s (tokens per second) with the right configuration. The performance of the 4090 is stellar, and it will likely remain a powerhouse for quite some time. u/VectorD over on Reddit even chained 4 of these together for his ultimate setup for handling even the most demanding local LLMs. Check it out if you need some inspiration for a multi-GPU rig like this.

3. AMD Radeon RX 7900 XTX 24GB

A powerful contender for the top spots, the AMD Radeon RX 7900 XTX comes with 24GB of VRAM on board, matching the capacity of the RTX 4090 and 3090. This makes it an excellent choice for running large models that demand significant memory.

With the continued maturation of AMD’s ROCm software platform, the 7900 XTX has become a highly viable and often more budget-friendly alternative to NVIDIA’s high-end offerings. For users who prioritize VRAM capacity and value, and don’t mind the somewhat less mature and less broadly compatible software ecosystem, the 7900 XTX is one of the best deals on the market for a 24GB card.

4. NVIDIA RTX 3090 / 3090 Ti 24GB

With the NVIDIA RTX 3090 and 3090 Ti, we’re stepping down in price even more, but surprisingly, without sacrificing VRAM. Both cards offer a generous 24GB of video memory with the same memory bus width as the 4090, making them some of the most cost-effective options for serious local AI work. The 3090 series is still among the most commonly chosen GPUs for LLM use for this very reason.

While the newer 4th and 5th generation cards are faster, the RTX 3090 and its slightly more powerful Ti sibling offer a great combination of performance and VRAM capacity, and can be had for a very good price if you search for second-hand units. This is one of the first options you should look at if you don’t want to overpay for the newest cards from NVIDIA.

5. NVIDIA RTX 4070 Ti Super 16GB

The NVIDIA RTX 4070 Ti Super has, as intended, surpassed the original RTX 4070 Ti in terms of performance. It isn’t just a slightly faster version of its older sibling: it also got a significant VRAM upgrade to 16GB of GDDR6X video memory and a wider 256-bit memory bus.

This places the 4070 Ti Super in a sweet spot, offering more memory than the 12GB cards below it and providing excellent performance for its price, if 16GB is enough memory for your particular workflows. It’s a card that is really worth it if you’re upgrading from a previous generation or just starting out and need a powerful, future-proof option without stretching the budget to a 24GB GPU.

6. AMD Radeon RX 7900 XT 20GB

Another strong entry from AMD, the Radeon RX 7900 XT carves out a pretty unique position with its 20GB of VRAM. This is a significant step up from the 16GB found on many competing NVIDIA cards in the same price bracket, giving you more breathing room for larger models or larger context windows, but it is still less than the 24GB of VRAM available on the 7900 XTX.

It provides a great balance of strong performance and a generous memory pool, at the same time being more affordable than the XTX model. As with its bigger brother, the 7900 XTX, it leverages the growing support for AMD GPUs in the AI community, offering excellent value, provided you’re ready to incorporate a non-CUDA GPU in your local LLM workflows.

7. NVIDIA RTX 4080 Super 16GB

The NVIDIA RTX 4080 Super is a very powerful GPU that comes right after the 4090 in the 4th generation lineup when it comes to raw performance. Where it falls short, however, is the VRAM department. The RTX 4080 Super maxes out at 16GB of GDDR6X VRAM, which is significantly less than what the 24GB cards have to offer.

As we’ve already established, for running large language models locally, you ideally want as much VRAM as you can possibly get. Still, the 4080 Super offers well-above-average performance and can yield surprisingly good text generation speeds as long as your models fit within the constraints of its video memory. A very good pick if you can find it at a good price and are fine with 16GB of VRAM on board.

8. NVIDIA RTX 3060 12GB

The NVIDIA RTX 3060, with 12GB of VRAM and a pretty low current second-hand/refurbished market price, is, in my book, the absolute best tight-budget choice for local AI enthusiasts, both for LLMs and image generation. I can already hear you asking: why is that? Well, the prices of the RTX 3060 have already fallen quite substantially, while its performance has not.

Although this amount of VRAM is nothing spectacular, this card allows you to run most 7B or 13B models with moderate quantization at decent text generation speeds. With the right models and the right configuration, you can get almost instant generations in many low- to medium-context window scenarios. If your budget is tight, this is the one that might interest you the most.
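If you already know roughly how much memory your model of choice needs, you can quickly narrow down the list. The small sketch below uses the VRAM capacities of the cards covered in this article. The 2GB of spare headroom for context and runtime overhead is an arbitrary assumption of mine, so adjust it to your own setup.

```python
# VRAM capacities (in GB) of the cards covered in this list.
CARDS_VRAM_GB = {
    "NVIDIA RTX 5090": 32,
    "NVIDIA RTX 4090": 24,
    "AMD Radeon RX 7900 XTX": 24,
    "NVIDIA RTX 3090 / 3090 Ti": 24,
    "NVIDIA RTX 4070 Ti Super": 16,
    "AMD Radeon RX 7900 XT": 20,
    "NVIDIA RTX 4080 Super": 16,
    "NVIDIA RTX 3060": 12,
}


def cards_that_fit(required_gb: float, headroom_gb: float = 2.0) -> list[str]:
    """Cards whose VRAM covers the requirement plus some spare headroom.

    `headroom_gb` is an assumed safety margin, not a measured value.
    """
    needed = required_gb + headroom_gb
    return [name for name, vram in CARDS_VRAM_GB.items() if vram >= needed]


# Example: a model that needs around 17GB of VRAM in total.
for name in cards_that_fit(17):
    print(name)
```

A model that needs more than any single card here can offer leaves you with an empty result, which is where multi-GPU setups come into play.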

Interested in other budget GPU options for local AI software? Check this out: Top 7 Best Budget GPUs for AI & LLM Workflows