Can you run ChatGPT-like large language models locally on your average-spec PC and get fast, quality responses while maintaining full data privacy? Well, yes, with some advantages over hosted LLM services like ChatGPT, but also some important drawbacks. The best part is that it’s completely free, and with the help of Gpt4All you can try it right now! Let’s get right into the tutorial!

What Is Gpt4All Exactly?

Gpt4All, developed by Nomic AI, lets you run many publicly available large language models (LLMs) and chat with different GPT-like models on consumer-grade hardware (your PC or laptop).

In practice, this means you can enjoy a ChatGPT-like experience locally on your computer, with reasonably quick responses and without sharing your chat data with any third parties!

Gpt4All is available for Windows, macOS, and Linux.

The exact installation instructions are available on the official Gpt4All website, as well as on its official GitHub page. Hint: it comes with a simple one-click installer, so you don’t have to mess around with console commands or anything like that to get it working right away!

What Is Gpt4All Capable Of?

The direct answer is: it depends on the language model you decide to use with it. There are several free, open-source language models available for download, both through the Gpt4All interface and on the official website. We’ll cover them in more detail shortly.

Each of these models is trained on different datasets and produces different outputs, both in terms of style and overall quality.

You can use Gpt4All as a personal AI assistant, a code generation tool, a roleplay partner, a simple data-formatting helper and much more; essentially for anything you would normally use ChatGPT or other LLMs for.

Which Language Models Can You Use with Gpt4All?

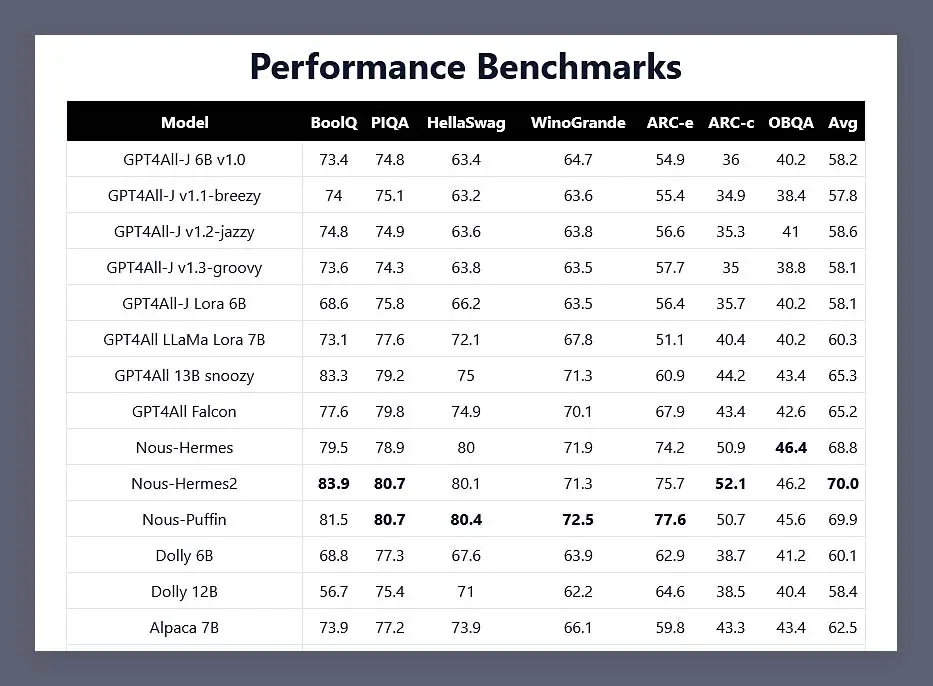

At the time of writing, Gpt4All supports GPT-J, LLaMA, Replit, MPT, Falcon and StarCoder type models. The main practical differences between these model architectures are their licenses and, to a lesser extent, their performance.

Now let’s look at the actual models you can download right away from the official Gpt4All website, or straight from the Gpt4All GUI!

Available Free Language Models

There are many free Gpt4All-compatible models to choose from, each trained on different datasets and each with its own strengths and weaknesses. The model files are usually around 3-10 GB in size and can be imported into the Gpt4All client. A model you import is loaded into RAM at runtime, so make sure your system has enough memory.
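As a rough sanity check on those file sizes and RAM requirements, here is a small back-of-the-envelope sketch. It assumes the 4-bit "q4_0" quantization that appears in the model file names listed below uses the ggml/llama.cpp-style layout of 32 weights per block (16 bytes of packed 4-bit values plus one 2-byte scale, roughly 4.5 bits per weight). The fixed `overhead_gb` for the context cache and runtime is a hypothetical placeholder, and the parameter counts are nominal, so treat the results as ballpark figures only.

```python
# Back-of-the-envelope memory estimate for 4-bit (q4_0-style) quantized models.
# Assumption: blocks of 32 weights, each stored as 16 bytes of packed 4-bit
# values plus one 2-byte (fp16) scale, i.e. 18 bytes per 32 weights.
BLOCK_SIZE = 32
BYTES_PER_BLOCK = 16 + 2


def q4_0_weights_bytes(n_params: int) -> float:
    """Approximate size of the quantized weights alone (about 4.5 bits per weight)."""
    return n_params / BLOCK_SIZE * BYTES_PER_BLOCK


def estimate_ram_gb(n_params: int, overhead_gb: float = 1.0) -> float:
    """Weights plus a hypothetical fixed overhead for context cache and runtime."""
    return q4_0_weights_bytes(n_params) / 1e9 + overhead_gb


if __name__ == "__main__":
    # Nominal parameter counts for the "7b" and "13b" models in the list below.
    for name, n_params in [("7B", 7_000_000_000), ("13B", 13_000_000_000)]:
        weights_gb = q4_0_weights_bytes(n_params) / 1e9
        total_gb = estimate_ram_gb(n_params)
        print(f"{name}: ~{weights_gb:.1f} GB of weights, ~{total_gb:.1f} GB RAM incl. overhead")
```

This works out to roughly 3.9 GB of weights for a 7B model and 7.3 GB for a 13B model, which fits the 3-10 GB range mentioned above. Longer context windows and other quantization formats will shift these numbers.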

The most popular models you can use with Gpt4All are all listed on the official Gpt4All website and are available for free download. Here are some of them:

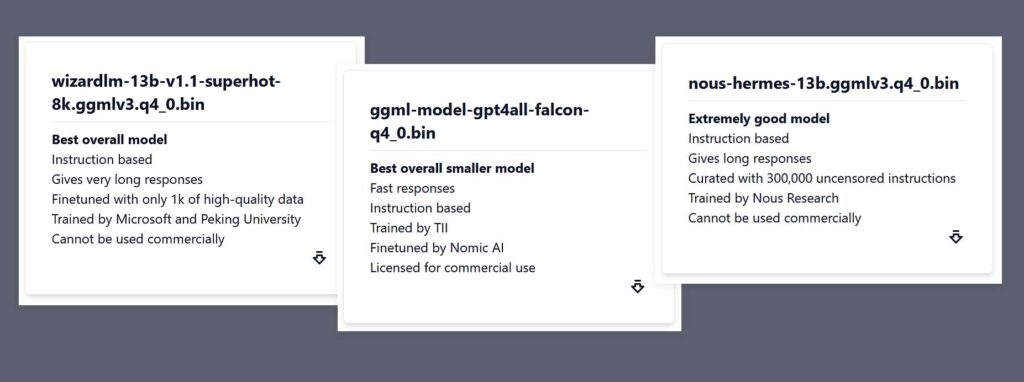

- Wizard LM 13b (wizardlm-13b-v1.1-superhot-8k.ggmlv3.q4_0) – Deemed the best currently available model by Nomic AI, trained by Microsoft and Peking University, non-commercial use only.

- Hermes (nous-hermes-13b.ggmlv3.q4_0) – Great-quality uncensored model capable of both long and concise responses. Trained by Nous Research, commercial use not allowed.

- Ggml Gpt4All Falcon (ggml-model-gpt4all-falcon-q4_0) – Small model fine-tuned by Nomic AI, commercial use allowed.

- LLaMA2 (llama-2-7b-chat.ggmlv3.q4_0) – Trained by Meta AI, optimized for dialogue, commercial use allowed.

- …and much more!

Each of these has its own upsides and downsides and can produce vastly different outputs from the very same prompts and generation settings. Download a few and try them for yourself; all of them are available for free!

Is Gpt4All GPT-4?

GPT-4 is a proprietary language model trained by OpenAI. As of now, nobody outside OpenAI has access to the model itself, and customers can only use it either through the OpenAI website or via developer API access. GPT-4 is a closed-source product.

Gpt4All, on the other hand, is a program that lets you load and use plenty of different open-source models, each of which you need to download onto your system first.

Because OpenAI’s original GPT-4 model is closed-source and not available for download, the Gpt4All client cannot run it on your machine for text generation.

Keep in mind, however, that many of the free open-source models you can use with Gpt4All were trained partially or fully on responses generated by GPT-4. This means that, in theory, certain publicly available LLMs can give you outputs somewhat similar to GPT-4’s. In practice, output quality varies a lot between different models and different prompts. Keep reading for examples!

Is Gpt4All Similar To ChatGPT?

In a sense, yes. However, as mentioned above, the language models that Gpt4All uses can in many cases be inferior to the gpt-3.5-turbo model that OpenAI’s ChatGPT uses.

Just like with ChatGPT, you can use any Gpt4All-compatible model as your smart AI assistant, roleplay companion or handy coding helper. Just don’t expect the same output quality for every prompt, at least for now.

In short, while both Gpt4All and ChatGPT are AI assistants based on large language models, their output quality for the same input prompts will differ, sometimes by a lot.

Gpt4All vs. ChatGPT – Quick Comparison

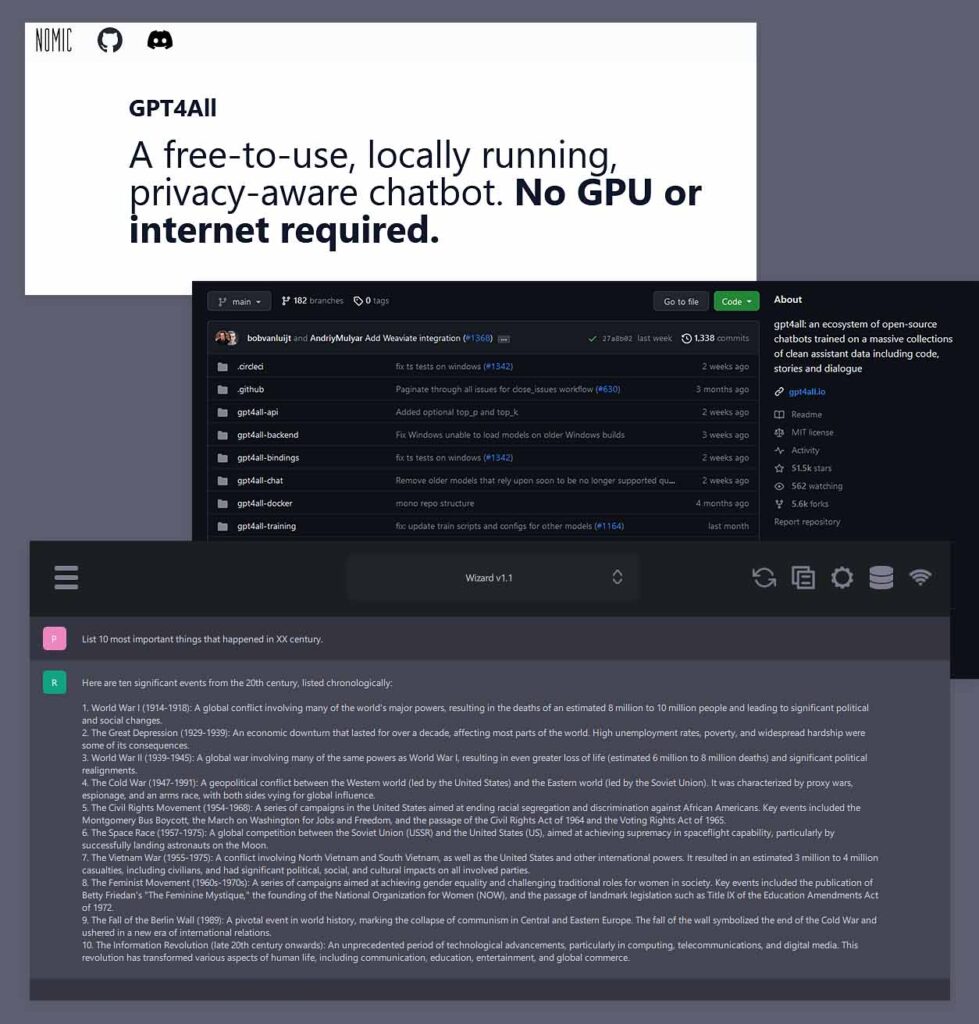

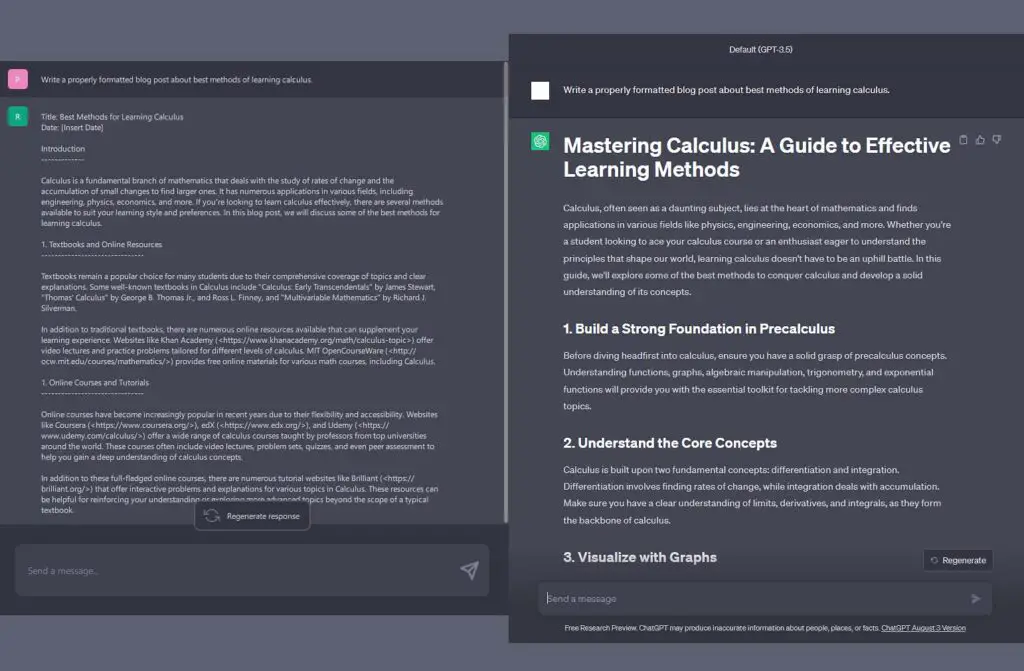

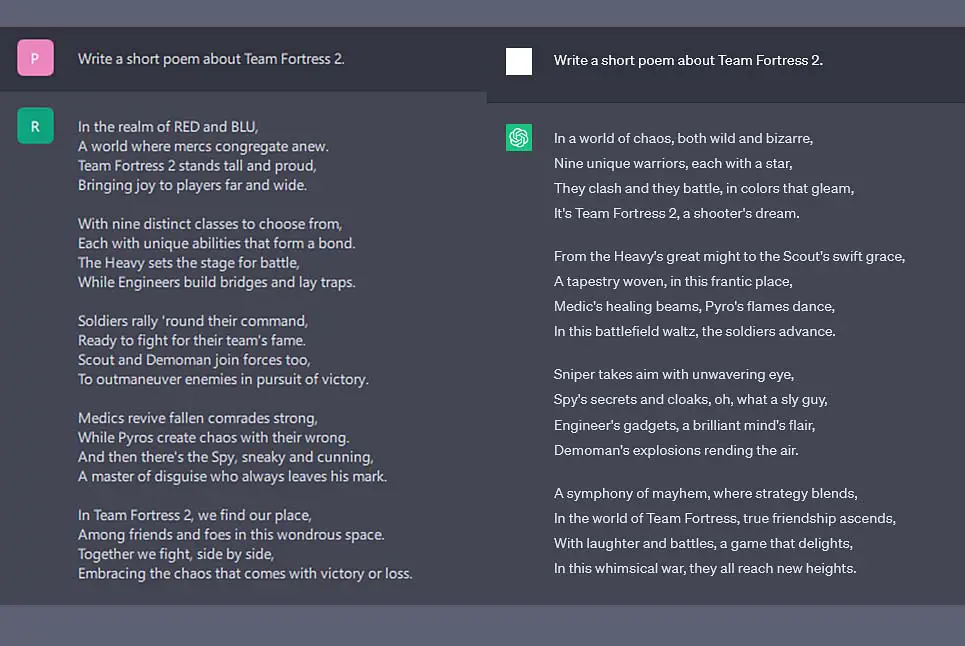

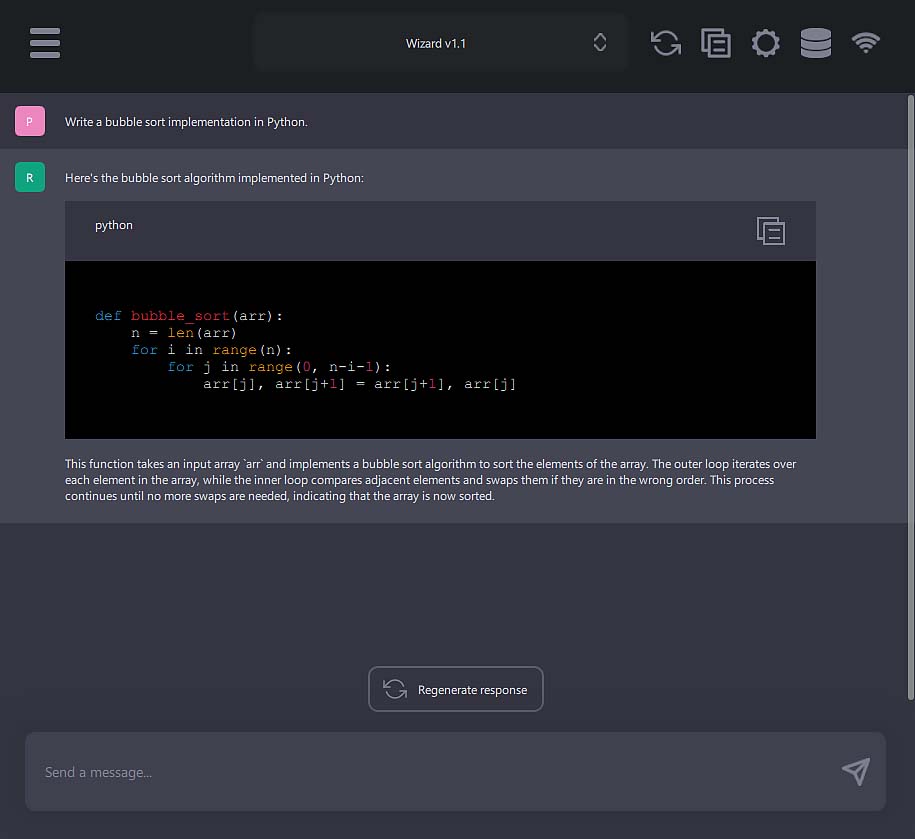

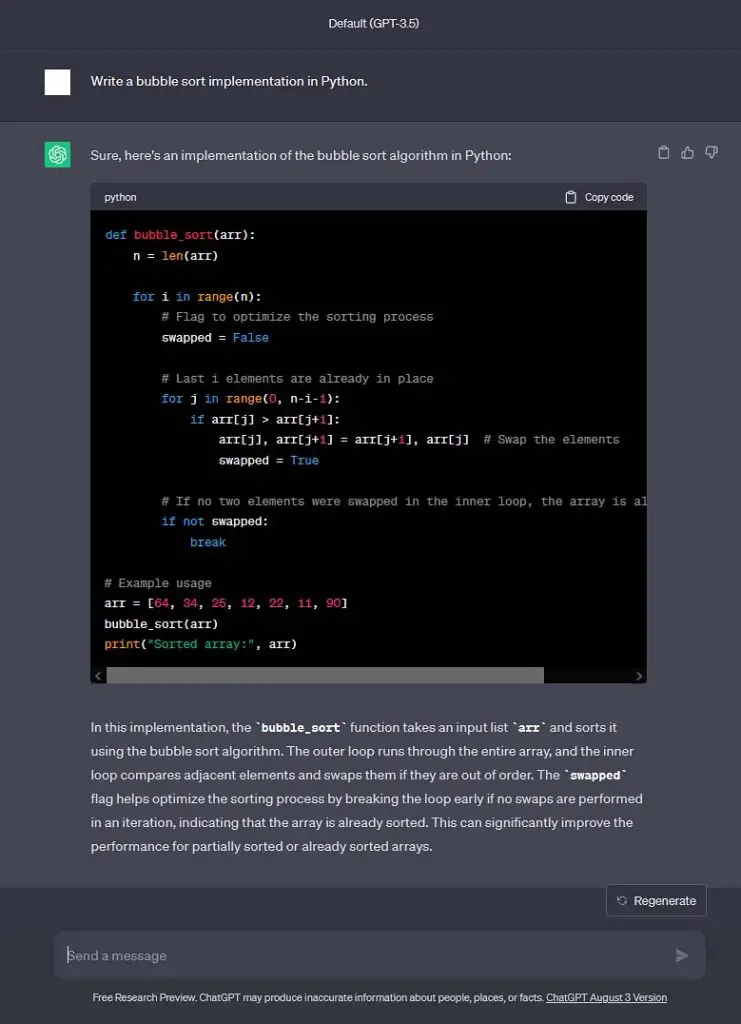

So what about output quality? Since we’ve mentioned it so often, here are three example answers to basic prompts, generated both by ChatGPT (using the gpt-3.5-turbo model) and by Gpt4All (with the Wizard LM 13b model loaded). Take a look.

Important note: These comparisons are only meant to give you a rough idea of what to expect when trying out Gpt4All for the very first time with the default Wizard v1.1 model. All prompts were tested with default settings, and all responses shown are the first ones the model returned, without regeneration.

While ChatGPT’s generation settings can hardly be changed when you use it through the OpenAI website, Gpt4All’s model settings can be freely tweaked inside the client. This means the outputs shown here could likely be improved with further tuning. Keep that in mind.
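To give a rough idea of what generation settings such as temperature, top-k and top-p typically do, here is a generic sketch of how they can shape the choice of the next token from a model’s raw scores (logits). It is a simplified illustration under our own assumptions, not Gpt4All’s actual code, and the default values in the function are arbitrary rather than the client’s defaults.

```python
import math
import random


def sample_next_token(logits, temperature=0.7, top_k=40, top_p=0.9, rng=random):
    """Pick one token index from raw logits using temperature, top-k and top-p filtering."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Temperature rescales the logits: < 1 sharpens the distribution, > 1 flattens it.
    scaled = [value / temperature for value in logits]
    # Softmax, subtracting the max first for numerical stability.
    peak = max(scaled)
    exps = [math.exp(value - peak) for value in scaled]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Top-k: keep only the k most likely token indices, best first.
    ranked = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:top_k]
    # Top-p (nucleus): keep the smallest prefix whose cumulative probability reaches top_p.
    kept, cumulative = [], 0.0
    for i in ranked:
        kept.append(i)
        cumulative += probs[i]
        if cumulative >= top_p:
            break
    # Draw one of the surviving tokens; random.choices normalizes the weights itself.
    return rng.choices(kept, weights=[probs[i] for i in kept], k=1)[0]


if __name__ == "__main__":
    rng = random.Random(0)
    logits = [2.0, 1.0, 0.5, -1.0]  # made-up scores for a 4-token vocabulary
    print([sample_next_token(logits, rng=rng) for _ in range(10)])
```

Lower temperature and smaller top-k or top-p values make the output more predictable, while higher values make it more varied, which is one reason the same prompt can produce noticeably different answers with different settings.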

The first task was to generate a short poem about the game Team Fortress 2. As you can see in the image above, both Gpt4All (with the Wizard v1.1 model loaded) and ChatGPT (with gpt-3.5-turbo) did reasonably well. Let’s move on!

The second task was to generate a bubble sort algorithm in Python. The Wizard v1.1 model in Gpt4All went with a shorter answer, complemented by a brief comment.

ChatGPT with gpt-3.5-turbo took a longer route, adding example usage of the written function and a longer explanation of the generated code.
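For reference, a typical correct answer to that prompt looks roughly like the sketch below. This is our own minimal version, not the output of either model; the early exit when a pass makes no swaps is a common optimization that a good answer may or may not include.

```python
def bubble_sort(items):
    """Sort a list in place with bubble sort and return it."""
    n = len(items)
    for end in range(n - 1, 0, -1):
        swapped = False
        # Each pass moves the largest remaining value up to index `end`.
        for i in range(end):
            if items[i] > items[i + 1]:
                items[i], items[i + 1] = items[i + 1], items[i]
                swapped = True
        # A pass with no swaps means the list is already sorted.
        if not swapped:
            break
    return items


print(bubble_sort([5, 1, 4, 2, 8]))  # [1, 2, 4, 5, 8]
```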

The third task, a somewhat more complex one in terms of natural language, was to generate a blog-style article about learning calculus with unspecified “proper formatting”. Only parts of the generated output are shown in the image.

While Gpt4All did reasonably well here in terms of content, it didn’t apply any useful formatting on its own in response to the vague “proper formatting” request in the prompt. ChatGPT, on the other hand, returned an answer formatted in Markdown without needing any additional formatting instructions.

While the overall structure of ChatGPT’s answer was a bit better, both were perfectly acceptable answers to the given prompt.

As you can see, the output can vary greatly depending on the prompt, the language model used and, in Gpt4All’s case, the chosen settings. Luckily, experimenting with Gpt4All is 100% free, so it doesn’t hurt to try it out for a bit with various prompts using different available models!

Privacy Concerns – Is Gpt4All Offline?

Privacy matters a lot to many people who chat with AI assistants. The truth is that every bit of data you share in an online conversation with an LLM-based AI assistant such as ChatGPT has to be sent to the service provider’s servers and processed outside your machine. Only then can the generated answer to your query be sent back to you over the internet. Locally run models work differently.

Different companies selling access to their online AI tools have different data protection and handling policies, and different ways of using user-generated data for further model training and fine-tuning. To see what OpenAI (and therefore ChatGPT) does with your conversation data, take a look at their official privacy policy.

Gpt4All, on the other hand, processes all of your conversation data locally, without sending it to any remote server on the internet. In fact, it doesn’t even need an active internet connection to work if you already have the models you want to use downloaded onto your system!

The Gpt4All client does, however, have an option to automatically share your conversation data, which is later used for language model training. If you want your conversation data to stay on your local system, make sure the option for contributing your data to the GPT4All Open Source Datalake is disabled, as it should be by default (see the image above).

Here is a quote from the GPT4All Open Source Datalake statement, which you can see next to the data-sharing option in the related settings menu:

NOTE: By turning on this feature, you will be sending your data to the GPT4All Open Source Datalake. You should have no expectation of chat privacy when this feature is enabled. You should; however, have an expectation of an optional attribution if you wish. Your chat data will be openly available for anyone to download and will be used by Nomic AI to improve future GPT4All models. Nomic AI will retain all attribution information attached to your data and you will be credited as a contributor to any GPT4All model release that uses your data!

Remember to leave this setting off if you want all the privacy Gpt4All offers!

Does Gpt4All Use Or Support GPU? – Updated

Newer versions of Gpt4All do support GPU inference, including support for AMD graphics cards through a custom GPU backend based on Vulkan.

In other words, the software is no longer limited to running on the CPU, which is great news.

So, Is Gpt4All Any Good?

All in all, Gpt4All is a great project to get into if you’re interested in training, fine-tuning, or simply running free, open-source large language models locally on your system. When it comes to setting tweaks and the privacy of your conversation data, it can be deemed “better than ChatGPT”; in terms of output quality, however, it sometimes falls short of commercial solutions.

If all this sounds interesting to you, don’t hesitate to try out Gpt4All by downloading the one-click installer straight from the official website. Enjoy!