So, watermarks appear in your photos and images generated using Stable Diffusion 1.5, 2.1 and other SD based models. Why is that? Does that mean that your AI generations are plagiarized? In this article we will go in-depth on the reasons why stock photo watermarks, such as the Dreamstime marketplace watermark, appear in Stable Diffusion AI generated images, how to prevent that, and what it actually means for you and for the future of generative AI models in general. Let us explain!

Author’s note: As a stock photographer and a digital content creator I might have a bit of a bias towards protecting the rights of all content creators alike. However, as someone who is also actively involved in AI technology development, I might be a bit biased towards the other side too, in the name of widely defined “progress”. Regardless of that, all my takes here are completely honest, so I hope you’ll get quite a bit out of this detailed write-up!

- The reason upfront

- Stock photo watermarks in Stable Diffusion outputs

- Artist watermarks in Stable Diffusion images

- Could this be prevented in any way?

- Are you able to remove the watermark from the Stable Diffusion images?

- How to remove the watermark from Stable Diffusion generated photos?

- If my generated image contains a watermark, is it copyright protected?

- Can you actually sell AI generated images in stock photo agencies?

- What to make of all this?

Check out our full Stable Diffusion AI image generation guide for beginners here: Stable Diffusion WebUI Settings Explained – Beginners Guide

The reason upfront

The simple reason for watermarks appearing in Stable Diffusion generated images is: a substantial number of photos containing watermarks were used to train the Stable Diffusion AI model you’re using to generate your images. As the watermarked images were present in the training set in high volumes, there is also a high chance that the output images with certain prompts will end up having a stock photo site watermark on them.

If that answer is enough for you, that’s great, but if you’re eager to know even more about this rather complicated situation, read on!

Stock photo watermarks in Stable Diffusion outputs

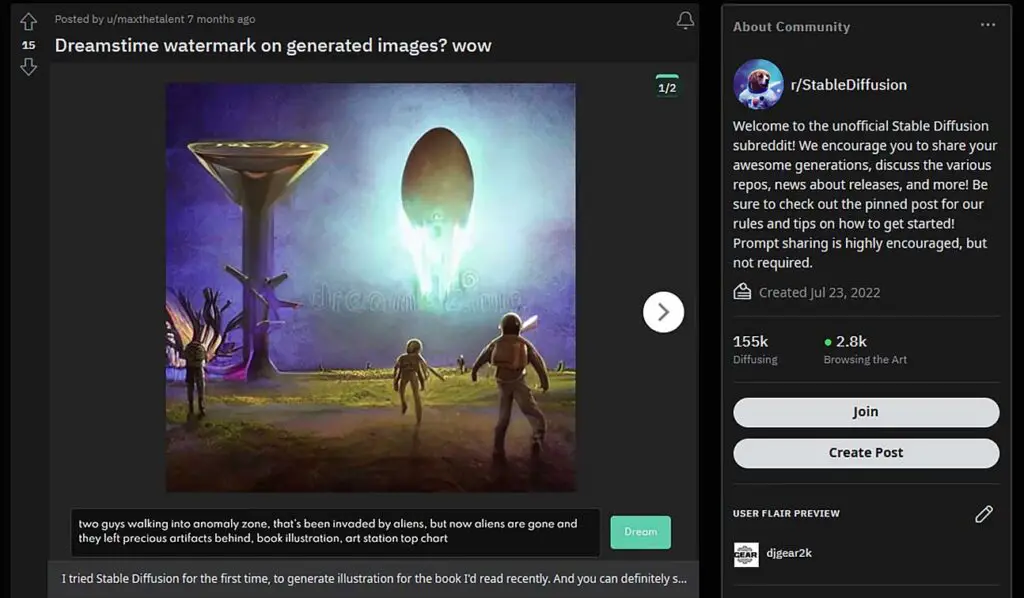

As we’ve said, stock photo watermarks appearing in your AI generated images are there because stock photos containing various forms of watermark copyright protection were actually present in the training dataset of the model you’re using – supposedly in rather high volumes. One of the most prevalent watermarks appearing in SD generations seems to be the Dreamstime agency watermark, judging by our experiments with Stable Diffusion 1.5, 2.1 and several other SD based models.

This phenomenon is one of the reasons why Getty Images, a large stock photo distribution company, has sued Stability AI, the company behind Stable Diffusion, over the use of its copyrighted material in the LAION image dataset that SD was trained on (more on that in a short while).

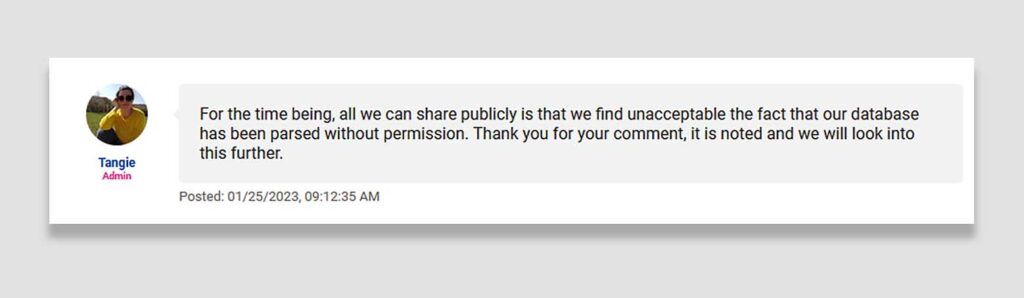

The issue is actively discussed in the Dreamstime stock agency community as well, as Dreamstime content also ended up in the LAION datasets used for training.

The main reason for stock photo watermarks appearing in these datasets is that the datasets were built from material sourced directly from the internet (you can think of it as picking, seemingly at random, a few hundred million pictures from Google Images to train the AI model). That huge sample was bound to have quite a lot of images containing watermarks, given that stock photos often make up a large chunk of the results for image-related queries across various search engines.


Artist watermarks in Stable Diffusion images

While stock photo watermarks are the most prevalent unwanted elements in Stable Diffusion generated photos, another thing that might appear in your generations is an artist or a random art-related site watermark.

The reason for that is exactly the same as the one we presented for stock photo watermarks. Various forms of visual art copy protection, however, have a much lower chance of appearing when using generic Stable Diffusion prompts with the original model, as stock photo images seem to make up a larger part of the training material we mentioned.

If you’re using a custom model/checkpoint that was trained using images from art-related sites, the probability of an artist watermark or signature appearing on your generated image can grow significantly. Again, in the end it always depends on the actual training set contents.

Could this be prevented in any way?

The stock agency watermark problem could potentially have been mitigated if, before the models were first trained on the LAION datasets, the photos containing distinct agency watermarks had been detected, filtered and removed from the training data.

However, even the very first LAION dataset, which the first Stable Diffusion model made use of for training, contained over 400 million images, so removing the photos containing stock agency logos from the training set would have required a fair amount of effort and processing power. In theory, though, it should have been doable.
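To make the filtering idea more concrete, here is a minimal sketch, assuming each dataset row carries a precomputed watermark probability. The `pwatermark` field name mirrors a column published with some LAION metadata, but the field name, the 0.5 cutoff and the sample rows are all assumptions made for illustration, not the procedure actually used to train any Stable Diffusion model.

```python
from typing import Iterable, Iterator, Mapping

# Assumed cutoff: rows scored at or above this value are treated as watermarked.
WATERMARK_THRESHOLD = 0.5


def drop_watermarked(
    rows: Iterable[Mapping[str, object]],
    threshold: float = WATERMARK_THRESHOLD,
) -> Iterator[Mapping[str, object]]:
    """Yield only the rows whose watermark probability is below the threshold."""
    for row in rows:
        score = row.get("pwatermark")  # hypothetical field name
        # Rows without a score are dropped too, which is the cautious choice.
        if score is None:
            continue
        if score < threshold:
            yield row


# Made-up sample metadata, standing in for a real dataset index.
sample_rows = [
    {"url": "https://example.com/cat.jpg", "pwatermark": 0.03},
    {"url": "https://example.com/stock-office.jpg", "pwatermark": 0.91},
    {"url": "https://example.com/unknown.jpg", "pwatermark": None},
]

kept = [row["url"] for row in drop_watermarked(sample_rows)]
print(kept)
```

The hard part in practice is not this loop but producing a reliable watermark score for hundreds of millions of images in the first place, which is where the effort and processing power mentioned above would go.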

Today, large numbers of newly created Stable Diffusion model checkpoints simply use one of the Stable Diffusion model versions as their base. As all Stable Diffusion models have been trained on different versions of the LAION datasets, all of which contain watermarked images, preventing watermarks from appearing in images generated with all the new SD derivative models would be much harder at this point.

As new checkpoints based on the LAION-trained models emerge, there is still a chance that you will be faced with an artist or stock agency watermark in your generations if you’re making use of such models.

Are you able to remove the watermark from the Stable Diffusion images?

You can try and prevent the watermark from appearing by simply adding a “watermark” keyword to your negative prompts before beginning the generation process. If this doesn’t work you can try and increase the weight of the “watermark” keyword.
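As a rough illustration of what "increasing the weight" looks like, the sketch below builds a negative prompt string using the `(keyword:weight)` emphasis syntax understood by the Stable Diffusion WebUI. The helper names are hypothetical, and the extra keywords "text" and "logo" are only illustrative additions, not recommendations from this article.

```python
def weighted(keyword: str, weight: float = 1.0) -> str:
    """Format a keyword using the WebUI "(keyword:weight)" emphasis syntax."""
    if weight <= 0:
        raise ValueError("weight must be positive")
    # A weight of exactly 1.0 is the default, so no wrapper is needed.
    if weight == 1.0:
        return keyword
    return f"({keyword}:{weight:g})"


def build_negative_prompt(keywords: dict[str, float]) -> str:
    """Join weighted keywords into a single comma-separated negative prompt."""
    return ", ".join(weighted(word, weight) for word, weight in keywords.items())


# "watermark" comes from the advice above; the other keywords are illustrative.
negative_prompt = build_negative_prompt({"watermark": 1.4, "text": 1.0, "logo": 1.2})
print(negative_prompt)  # (watermark:1.4), text, (logo:1.2)
```

You would paste the resulting string into the negative prompt field. Very high weights can distort the rest of the image, so it is usually worth raising the value in small steps.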

Keep in mind that adding keywords such as “stock photo” or “stock photography” to your prompts might actually increase the chances of a watermark appearing on your generated images. This is to be expected, as the output will then be biased towards the part of the training dataset labeled “stock photo”, which of course contains a large number of watermarked images.

See also: Stable Diffusion Prompts How To For Beginners (Quickly Explained!)

Note: While removing watermarks from Stable Diffusion generated images isn’t really an issue, don’t ever attempt to remove any form of copy protection from stock photo image samples. This is an illegal activity and it mostly hurts the authors of the photos in question.

How to remove the watermark from Stable Diffusion generated photos?

If you weren’t able to prevent the watermark being generated using Stable Diffusion, in general there are three things you can do.

- Remove the watermark from the generated image using 3rd party image editing software (such as Photoshop).

- Attempt to remove it using the Stable Diffusion WebUI inpainting feature.

- Discard the generated image and attempt to generate another one, this time with a “watermark” added as a negative prompt with an increased weight.
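The third option above can be sketched as a simple retry loop that raises the "watermark" weight after every failed attempt. This is only a sketch under stated assumptions: `generate` and `has_watermark` are hypothetical callables that you would have to supply yourself (for example a wrapper around your generation tool and a manual or automated check), and the starting weight, step size and attempt limit are arbitrary.

```python
from typing import Callable, Optional, TypeVar

Image = TypeVar("Image")


def generate_without_watermark(
    generate: Callable[[str, str], Image],   # hypothetical: (prompt, negative_prompt) -> image
    has_watermark: Callable[[Image], bool],  # hypothetical watermark check
    prompt: str,
    start_weight: float = 1.0,
    step: float = 0.2,
    max_attempts: int = 4,
) -> Optional[Image]:
    """Regenerate with a growing "watermark" weight until the check passes."""
    for attempt in range(max_attempts):
        # Compute the weight from the attempt index to avoid float drift.
        weight = round(start_weight + attempt * step, 2)
        negative_prompt = f"(watermark:{weight:g})"
        image = generate(prompt, negative_prompt)
        if not has_watermark(image):
            return image
    # Every attempt still showed a watermark; fall back to editing or inpainting.
    return None
```

If the function returns `None`, the first two options in the list (image editing software or inpainting) are the remaining ways out.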

If my generated image contains a watermark, is it copyright protected?

Although we aren’t here to provide legal advice, and the legal situation concerning AI generated images varies from model to model and from institution to institution, one thing is worth keeping in mind. Given the way latent diffusion image generation works, the images generated by the SD models should in general be, in a mathematical sense, new content created based on the initial model training dataset.

If the generated image contains a watermark, it doesn’t mean that the generated photo itself was taken straight from a copyright protected resource. It simply means that the model you chose was trained on a large number of watermarked images, which affects the features of the output image for certain input prompts.

Whether or not the image you generated is your actual property in a legal sense depends both on the model you’re using and on the app, website or software you used to generate it. You can find this kind of information in the place you got your model from and in the official ToS of the tool you’re using.

There isn’t really a high chance of generating an actual 1:1 existing stock photo using the base Stable Diffusion models, although there always might be a slight chance of generating an image that can in some ways resemble a photo that already exists somewhere out there.

Can you actually sell AI generated images in stock photo agencies?

This varies from agency to agency. In general there will be two opposite stands taken by stock photo agencies – either they will accept AI generated content into their collections provided the seller holds the actual copyrights to the content and the content itself complies with their ToS, or they won’t accept any kind of AI generated content at all.

Among the agencies that you can sell AI generated images with are for instance:

Check out also: Is Stock Photography Still Worth It? – An Honest Take

The stock photo agencies that do not allow their users to sell any kind of AI generated content include, for example, Getty Images together with iStock Photo, as well as Shutterstock. Shutterstock actually uses its contributors’ images to train its own image generation model, which customers can access through its in-house AI image generation tool.

For the full list of stock photo agencies that support (or don’t support) generative AI content check out this extensive list compiled by upstock guru.

What to make of all this?

In these early days (and months) of the AI image generation craze, there are still many legal and moral concerns about the material that is now being created by thousands of people using generative AI tools all over the world.

All in all, the presence of watermarked (or otherwise copyright protected) images in model training datasets is a controversial thing, regardless of the technology involved. There are many ongoing debates on whether or not this kind of material should be filtered from training sets in the future, how to do so reliably, and what to do with images that have been generated using models that were already trained using copyrighted content.

A common statement made by many is that if copyright protected imagery is used to train an AI model, each respective copyright holder should be compensated for the image use in some way, or be able to control in advance whether their work is included in various AI training datasets. The other, controversial take on this matter is that because it would be nearly impossible to compensate each and every owner of copyright protected content present in the chosen training datasets, no compensation is needed at all.

Stay vigilant and mindful of the rules imposed by model creators, generation tool authors and official legal bodies, as the situation is bound to change in the upcoming months and years. The generative AI market is rapidly evolving, and many of the controversies surrounding it can and should be slowly but surely cleared up as time goes by and appropriate solutions are proposed. At least we hope so!
