The RTX 3090 and its Ti version are considered by many, including me, to be among the best-priced GPUs for AI software. Here is my deeper analysis, which will hopefully help you decide whether the 3090 is still worth buying, new or used, for all your AI software needs this year. Let’s get to it!

This web portal is reader-supported, and is a part of the Amazon Services LLC Associates Program and the eBay Partner Network. When you buy using links on our site, we may earn an affiliate commission!

If you’re looking for the best GPU for AI software, including budget options, you should definitely check out my personally compiled list of graphics cards that, by my standards, are still worth considering this year. Feel free to check it out here: Best GPUs For AI Training & Inference This Year – My Top List

24GB of VRAM For a Good Price?

Pros:

- Great performance.
- 24GB of VRAM on board.
- The most often chosen model for local AI software.
- The best card from the 3xxx lineup.
- Great price-to-performance ratio.
- Many worthwhile second-hand deals.

Cons:

- Not quite as powerful as the 4xxx series overall.
- Not the most recent NVIDIA GPU you can get.

If you use any kind of software for deep learning model training and inference, for instance the Kohya GUI for training Stable Diffusion LoRA models or the OobaBooga WebUI for running open-source large language models locally, you know that in most situations you can never have enough VRAM on hand.

Unfortunately, as things are now, the most video memory you can get on a single standard high-end consumer-grade GPU is 24GB. And in general, the more VRAM a graphics card has, the more it costs, sad as that reality is.

Still, there aren’t many graphics cards on the market with this much VRAM on board, and not all of them are fast enough to be worth considering if you want strong AI performance without resorting to a more complex, trickier-to-assemble dual-GPU setup.

Two of the first cards that come to mind are the RTX 4090 and the RTX 3090, which both feature 24GB of VRAM but come from two different generations of NVIDIA GPUs. The first is much more expensive and much faster; the second offers the best price-to-performance ratio on the market right now. And the second one is exactly the card we’re going to talk about.

When looking at the NVIDIA RTX 3090 itself, it’s impossible not to compare it to the 4090, as that is one of the first things most people do when looking for the best GPU to upgrade to with AI software in mind.

RTX 3090 Ti vs. RTX 4090 for Machine Learning

As benchmarks made by people with more patience than me show, the RTX 4090 is, quite understandably, noticeably faster than the 3090 Ti (roughly 10-20% in most performance tests), while also being much more expensive. The power consumption of the two cards is roughly the same, and their perceived real-world performance is also fairly close.

When you use the 3090 with the most popular open-source AI software, like Automatic1111, ComfyUI, Kohya, OobaBooga, AICoverGen, or anything similar, the large amount of VRAM on the card is what you’ll benefit from the most.

Larger batch sizes for image generation, larger batches when fine-tuning models and training LoRAs, the ability to load higher-quality LLMs for local inference without aggressive quantization, and, in many cases, faster training runs thanks to the larger pool of memory: these are without doubt the biggest advantages an upgrade to a 3090/3090 Ti can give you. And all this for a very good price compared to the very same 24GB of VRAM on a brand-new 4090.
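To put the "without aggressive quantization" point into rough numbers, here is a minimal Python sketch of the back-of-the-envelope math many people use: the weights alone take roughly parameters × bits per weight ÷ 8 bytes, plus some headroom. The 20% `overhead` allowance and the helper names `estimate_vram_gb` and `fits` are my own illustrative assumptions, not figures from any particular tool, and real usage varies with context length and the loader you use.

```python
def estimate_vram_gb(params_billion: float, bits_per_weight: float, overhead: float = 0.2) -> float:
    """Rough VRAM estimate (decimal GB) for loading an LLM for inference.

    Weights: params * bits / 8 bytes. `overhead` is a hypothetical fractional
    allowance for the KV cache, activations and the CUDA context.
    """
    weights_gb = params_billion * bits_per_weight / 8  # billions of params -> GB
    return weights_gb * (1 + overhead)


def fits(params_billion: float, bits_per_weight: float,
         vram_gb: float = 24.0, overhead: float = 0.2) -> bool:
    """True if the estimate fits within `vram_gb` (24GB by default, as on a 3090)."""
    return estimate_vram_gb(params_billion, bits_per_weight, overhead) <= vram_gb


if __name__ == "__main__":
    for params in (7, 13, 34, 70):
        for bits in (16, 8, 4):
            need = estimate_vram_gb(params, bits)
            verdict = "fits" if fits(params, bits) else "too big"
            print(f"{params:>3}B @ {bits:>2}-bit: ~{need:5.1f} GB -> {verdict} on one 24GB card")
```

With these assumptions, a 13B model at 16-bit (about 31 GB) doesn't fit on a single 24GB card, while the same model at 8-bit (about 15.6 GB) or a 34B model at 4-bit (about 20.4 GB) does. Keep in mind this only covers inference; training and fine-tuning also need memory for gradients and optimizer states, so the real requirements there are considerably higher.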

The clock speed difference is real, and it would be rather unwise to claim otherwise, but in the grand scheme of things, the only thing that in my honest opinion could be holding you back from getting a 4090 instead is that the performance jump isn’t really justified by the price, especially given the real-life results shown by user comparisons like this one.

When it comes to gaming, there is not much to write about, as with both cards you will be able to run most AAA titles released up to around 2023 at high settings on 1080p and 2K displays. The 4090, however, is the more future-proof option in that department. Remember that the 3xxx series is already a few years old at this point, and while it’s still more than capable of 2K gaming, it will become obsolete a little earlier than NVIDIA’s 4th-generation (4xxx) hardware.

Which brings us to a quick side question…

How Does The RTX 3090 Differ From Its Ti Version?

The difference is about what you would expect when comparing other base NVIDIA GPUs with their “Ti” upgrades. Here is what it looks like for the 3090 and the 3090 Ti.

The NVIDIA RTX 3090 Ti is roughly 10-15% faster than the base 3090, which came out about a year and a half earlier (September 2020 vs. March 2022). Both have 24GB of video memory, with the 3090 Ti having a slightly faster memory clock and a little more memory bandwidth. It also has a TDP of 450 watts, which is 100W higher than the original 3090.
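The bandwidth gap is easy to check yourself. Theoretical peak memory bandwidth is simply the memory bus width multiplied by the per-pin data rate, divided by 8 to convert bits to bytes. The sketch below plugs in the commonly published spec-sheet values for both cards (a 384-bit bus with 19.5 Gbps GDDR6X on the 3090 and 21 Gbps on the 3090 Ti); treat the result as a theoretical ceiling, not a measured benchmark.

```python
def peak_bandwidth_gbs(bus_width_bits: int, data_rate_gbps: float) -> float:
    """Theoretical peak memory bandwidth in GB/s.

    bus width (bits) * per-pin data rate (Gbit/s) / 8 bits per byte
    """
    return bus_width_bits * data_rate_gbps / 8


# Spec-sheet values: (memory bus width in bits, GDDR6X data rate in Gbps)
CARDS = {
    "RTX 3090": (384, 19.5),
    "RTX 3090 Ti": (384, 21.0),
}

if __name__ == "__main__":
    bandwidth = {name: peak_bandwidth_gbs(*spec) for name, spec in CARDS.items()}
    for name, value in bandwidth.items():
        print(f"{name}: {value:.0f} GB/s")  # 936 GB/s and 1008 GB/s
    gain = (bandwidth["RTX 3090 Ti"] / bandwidth["RTX 3090"] - 1) * 100
    print(f"3090 Ti bandwidth advantage: ~{gain:.1f}%")  # ~7.7%
```

That works out to roughly 7.7% more bandwidth for the Ti, which is in line with the small overall gap between the two cards.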

In general, the speed difference is there, but it’s rather small, at least compared to the noticeably larger gap between the 3xxx and 4xxx series that I touched upon when comparing the 3090 and the 4090.

What Really Makes The RTX 3090 Worth Considering?

In benchmark tests, the RTX 3090 scores noticeably better than the RTX 3080 and considerably better than the RTX 3070. The 3090 can also be connected to another compatible NVIDIA GPU via NVLink, an option the 3080 and the 3070 don’t give you at all.

This makes the RTX 3090 and its Ti version the two best NVIDIA cards of the whole 3xxx generation and, in my book, the only ones really worth considering at this point.

What’s even cooler, if you’d like to go crazy and connect two 3090s using NVLink, you would get a nice round 48GB of combined VRAM for software that can split a model across both cards. That is enough for most AI-related tasks you could think of performing in a home lab environment.
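Here is a rough idea of how a model bigger than one card can be spread across two 24GB GPUs. This is a minimal, hypothetical sketch of greedy layer placement, not the algorithm any specific loader uses (tools such as Hugging Face Accelerate’s `device_map="auto"` handle placement for you). The 80-layer, 0.5 GB-per-layer model and the 2 GB per-card `reserve_gb` headroom are made-up example values.

```python
from typing import List


def split_layers(num_layers: int, layer_gb: float, gpu_capacity_gb: List[float],
                 reserve_gb: float = 2.0) -> List[int]:
    """Greedily place consecutive layers on GPUs, filling each card before the next.

    Returns the number of layers assigned to each GPU. `reserve_gb` is
    hypothetical headroom kept free on every card (KV cache, activations,
    CUDA context). Raises ValueError if the whole model does not fit.
    """
    counts: List[int] = []
    remaining = num_layers
    for capacity in gpu_capacity_gb:
        usable = max(capacity - reserve_gb, 0.0)
        # Number of whole layers that fit in this card's usable memory
        n = min(remaining, int(usable // layer_gb))
        counts.append(n)
        remaining -= n
    if remaining > 0:
        raise ValueError(f"{remaining} layers do not fit on the given GPUs")
    return counts


if __name__ == "__main__":
    # Hypothetical model: 80 layers of 0.5 GB each, about 40 GB of weights
    print(split_layers(80, 0.5, [24.0, 24.0]))  # prints [44, 36]
    try:
        split_layers(80, 0.5, [24.0])  # a single 24GB card
    except ValueError as err:
        print("single card:", err)
```

In this example, the 40 GB model ends up with 44 layers on the first card and 36 on the second, while a single 24GB card raises an error. Splitting layers like this doesn’t strictly require NVLink, since the cards can also exchange data over PCIe, just more slowly.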

If you’re looking for the best card for locally hosted LLMs, I’ve got a comparison list I compiled just for you: Best GPUs For Local LLMs This Year (My Top Picks!)

The Question That Many Avoid – Should You Get a Used RTX 3090?

My opinion on this is that it depends on one thing: whether or not you’re able to get a working unit in reasonably good condition. The thing with buying graphics cards used is that there is no guarantee you will get a card that lives up to your expectations. The good news is that, most of the time, if a card you got second-hand works just fine, it most probably won’t break down on you as soon as you might fear. In the end, it’s really a mixture of luck and the seller’s honesty. Having been pretty lucky with my own purchases, I can only say so much.

The RTX 3090, already a model from the previous 3xxx GPU generation, will naturally become cheaper as time goes by. I would argue that now is the best time to get a second-hand 3090. The market is slowly filling up with used units, as many people are getting new 4xxx GPUs and selling their old graphics cards after the upgrade.

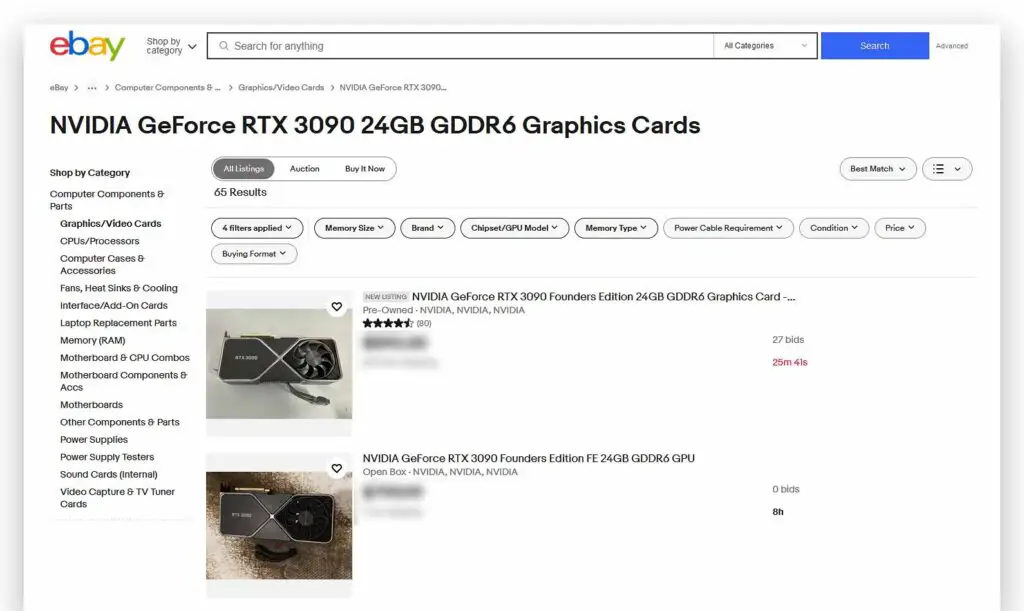

Where should you look for the best deals? Well, for me, one of the best places right now is simply the eBay marketplace, if not for the final purchase, then at least to get an idea of current second-hand prices for your chosen GPU. And believe me, used 3090 and 3090 Ti prices can get really low compared to the new units that are still circulating on the market.

Make sure to ask the previous owner about the card’s condition and how it was used before it was put up for sale. I would not buy a card that was used extensively for mining, but if you’re willing to take the risk, you could get your GPU even cheaper. Freshly replaced thermal paste and thermal pads are always a nice bonus, although many GPUs become obsolete on the market long before they start to seriously suffer from thermal issues caused by a lack of servicing. Also, make sure that the GPU being sold to you actually works. In many listings, only a single line of text at the bottom tells you that the card is damaged or otherwise unusable.
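If you can test the card in person (or right after it arrives), a quick first check is whether the driver detects it and reports the full 24GB. The sketch below calls `nvidia-smi` with its `--query-gpu` and `--format=csv,noheader,nounits` options and parses the result; the helper names `parse_gpus` and `looks_like_24gb_card` and the 23,000 MiB threshold are my own hypothetical choices. Passing this check only shows the card is recognized correctly, so it’s no substitute for running a longer AI or stress workload on it.

```python
import shutil
import subprocess
from typing import List, Tuple

# Ask for GPU name, total memory (MiB) and current temperature (C) as plain CSV
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,memory.total,temperature.gpu",
    "--format=csv,noheader,nounits",
]


def parse_gpus(csv_text: str) -> List[Tuple[str, int, int]]:
    """Parse nvidia-smi CSV rows into (name, total memory in MiB, temperature in C)."""
    gpus = []
    for line in csv_text.strip().splitlines():
        name, mem_mib, temp_c = (field.strip() for field in line.split(","))
        gpus.append((name, int(mem_mib), int(temp_c)))
    return gpus


def looks_like_24gb_card(mem_mib: int, min_mib: int = 23_000) -> bool:
    """Hypothetical sanity check: a 24GB card should report well above 23,000 MiB."""
    return mem_mib >= min_mib


if __name__ == "__main__":
    if shutil.which("nvidia-smi") is None:
        print("nvidia-smi not found; install the NVIDIA driver first")
    else:
        out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
        for name, mem, temp in parse_gpus(out):
            status = "OK" if looks_like_24gb_card(mem) else "check this card"
            print(f"{name}: {mem} MiB, {temp} C -> {status}")
```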

All in all, I’ve bought a few used GPUs, and in every case I got my money’s worth. Just be mindful of the basic rules of second-hand tech shopping and you should be fine!