Do you need a “pruned” model or a regular Stable Diffusion model, and how do the two differ? We’ll explain this in a few short paragraphs and then show you how you can prune an SD model yourself. Read on!

- What Is “Pruning” In Machine Learning?

- How Does Pruning Actually Work?

- Does Pruning a Model Degrade Its Quality?

- Pruned vs. Unpruned Models In Stable Diffusion

- Should You Use a Pruned Model For Training?

- How Do You Prune a Stable Diffusion Model?

You might also like: Ckpt vs. Safetensors In Stable Diffusion (Differences & Safety)

What Is “Pruning” In Machine Learning?

The simplest definition of pruning in machine learning, and more specifically deep learning, is this: trimming unnecessary weights/parameters in a neural network that add little or nothing to the network’s performance but still take up space in the final model file.

Pruning is a concept associated with neural networks, so it also applies to Stable Diffusion checkpoints, which are latent diffusion models built on neural networks.

How Does Pruning Actually Work?

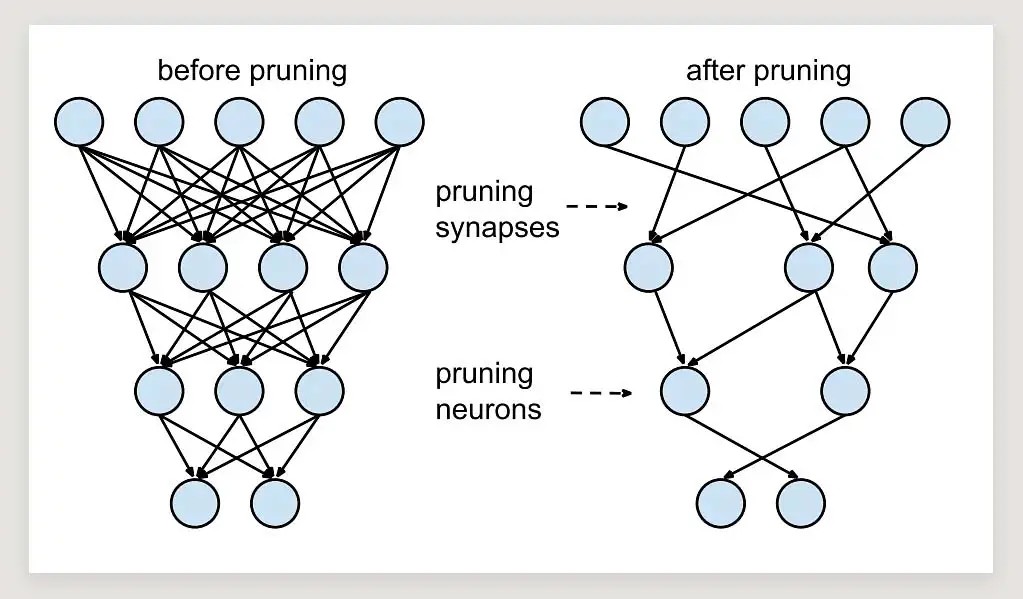

So, as we’ve just learned, model pruning is a technique for reducing the size of deep learning models. Imagine a neural network model with thousands and thousands of connections between its parts. Pruning involves removing many of these connections, but doing it in a way that doesn’t harm the network’s performance much.

Researchers have found that removing weights/parameters that are very close to zero doesn’t make the network much worse at its job (like recognizing or generating images), but it can make the network much smaller and, with software that takes advantage of the removed weights, faster. Saving a lot of space and gaining performance without significant accuracy loss: isn’t that great?
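To make the idea concrete, here is a minimal sketch of magnitude pruning in plain Python. It is an illustration under simplifying assumptions, not the method used by any particular Stable Diffusion tool: the function name `magnitude_prune`, the `sparsity` parameter, and the toy weight values are all made up for this example, and real networks store their weights in large tensors rather than short lists.

```python
def magnitude_prune(weights, sparsity):
    """Return a copy of `weights` with the smallest-magnitude fraction set to 0.0.

    `sparsity` is the fraction of weights to zero out (hypothetical parameter,
    e.g. 0.5 zeroes the half of the weights that are closest to zero).
    """
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")

    n_prune = int(len(weights) * sparsity)
    if n_prune == 0:
        return list(weights)

    # Rank weight positions by absolute value, smallest first.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])

    # Keep the large weights as they are and replace the small ones with zero.
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Toy example: eight made-up weights, half of them very close to zero.
weights = [0.82, -0.003, 0.0007, -0.41, 0.015, 0.0001, -0.96, 0.002]
pruned = magnitude_prune(weights, sparsity=0.5)
print(pruned)
```

With `sparsity=0.5`, the four values closest to zero are replaced with `0.0`, while the larger weights, which carry most of the network’s behavior, are left untouched.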

Under certain conditions, pruning can make networks roughly 10 times smaller while keeping them highly accurate. This can be very useful when trying to make AI work well on devices with limited resources.

Did you know that the whole idea of pruning, just like artificial neural networks themselves, is based on what naturally happens in the human brain? Pruning in artificial neural networks is inspired by the way the brain trims its number of synapses through the gradual decay and elimination of axons and dendrites. This natural process usually starts shortly after birth and continues until approximately the mid-20s, spanning childhood, puberty, and early adulthood.

You can learn much more about the whole concept of neural network pruning in this article over on Medium.

Does Pruning a Model Degrade Its Quality?

Usually yes, but only by a very small amount. You can find various comparisons online that show small, almost unnoticeable differences in detail between images generated using pruned and unpruned Stable Diffusion models.

In this article by Jacob Gildenblat about pruning in deep learning you can see that pruning a neural network can cost less than one percent of accuracy, all while shrinking the model almost four times and cutting the inference time almost three times!

In general, there are no real downsides to using a pruned Stable Diffusion model for inference (generating images). Training a new Stable Diffusion checkpoint is a different matter though. More on that in a short while!

Pruned vs. Unpruned Models In Stable Diffusion

So, let’s get back on track and see how pruned and unpruned versions of models differ when it comes to Stable Diffusion.

When training a Stable Diffusion checkpoint, in most cases you will end up with many model weights/parameters whose values are close to zero, yet not exactly equal to zero. Later on, during inference, these very small weights are still used in the image generation process. They have no significant impact on the output, but they still take time to process.

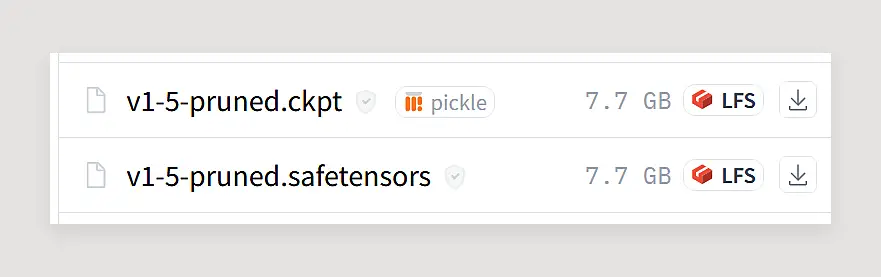

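Pruning a Stable Diffusion model/checkpoint lets you automatically get rid of these less significant weights by setting them to exact zeros. This can shrink the model by quite a lot and can also speed up inference by skipping unnecessary computation, provided the file format and the software running the model take advantage of the zeros. Note that many checkpoints labeled “pruned” in the Stable Diffusion community are also slimmed down in simpler ways, such as dropping data that is only needed for training (for example a duplicate set of EMA weights or optimizer state) or storing the weights in half precision.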

With the decreased size of a pruned neural network model come quite a few advantages. Here are two of the most important ones:

- Smaller Model Size – Quite naturally, less data in the model translates to smaller model size. Pruned networks can weigh much less than unpruned ones. This is a great advantage if you’re hoarding lots of different Stable Diffusion checkpoints on your hard drive!

- Faster Inference – A model with fewer weights/parameters means less data to process during inference. For you, this means faster image generation with pruned SD models.
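One caveat about the size advantage: a zeroed weight still occupies the same number of bytes as any other value unless the storage format exploits the zeros. The sketch below illustrates this with made-up random weights and a hypothetical threshold, using `zlib` compression purely as a stand-in for a zero-aware storage format. It does not reflect how Stable Diffusion checkpoints are actually saved.

```python
import random
import struct
import zlib

random.seed(0)

# Made-up "layer" of 10,000 weights clustered around zero.
weights = [random.gauss(0.0, 0.05) for _ in range(10_000)]

# Hypothetical threshold: everything smaller in magnitude becomes exactly 0.0.
threshold = 0.08
pruned = [w if abs(w) >= threshold else 0.0 for w in weights]


def pack(values):
    """Serialize the values as little-endian 32-bit floats (4 bytes each)."""
    return struct.pack(f"<{len(values)}f", *values)


dense_raw, pruned_raw = pack(weights), pack(pruned)
dense_zip, pruned_zip = zlib.compress(dense_raw), zlib.compress(pruned_raw)

# Stored densely, both versions take exactly the same space.
print("raw bytes:       ", len(dense_raw), len(pruned_raw))
# Once the zeros can be exploited, the pruned version gets much smaller.
print("compressed bytes:", len(dense_zip), len(pruned_zip))
```

The raw sizes are identical because every value, zero or not, is written as four bytes. Only the second comparison shows a saving, which is why the actual file size of a pruned model depends on how it was saved.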

As you can see, using pruned models for inference gives you a lot of advantages without any substantial quality loss in your generations. But what about model training? Can, or rather, should you use pruned models for training new Stable Diffusion checkpoints?

You should know this: A much more important difference is the difference between the .ckpt and .safetensors Stable Diffusion model formats. In fact, it’s a matter of security! You can learn more about this matter here: Ckpt vs. Safetensors In Stable Diffusion (Differences & Safety)

Should You Use a Pruned Model For Training?

The short answer is: no. While pruned models are great for inference and they give you many advantages over the much larger unpruned original models, when training a Stable Diffusion checkpoint you want to use a non-pruned base Stable Diffusion checkpoint for the process.

Why is that? Well, the main reason is that while the near-zero weights removed during pruning don’t affect the quality of the generated images much, these weights can actually matter when it comes to continuing the model training from a given checkpoint.

For further training, even the smallest values in the model can eventually become significant. When continuing the training process, it’s best to start from the checkpoint that preserves the most information. Even the smallest parameter values may end up changing and having a large impact on the final training results. Keep that in mind!

Use regular, unpruned models for training when it comes to Stable Diffusion, and in most other ML contexts too. This way you give yourself the best chance of getting good results in the end.

How Do You Prune a Stable Diffusion Model?

Can you prune a Stable Diffusion model/checkpoint locally on your computer? Actually yes, and it’s rather simple. There are a few ways to do that.

To prune a Stable Diffusion model you can:

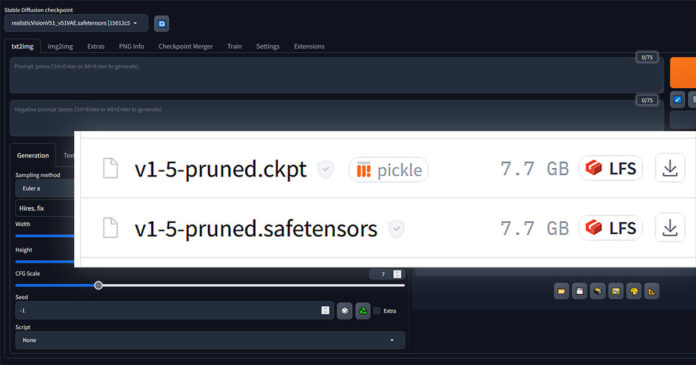

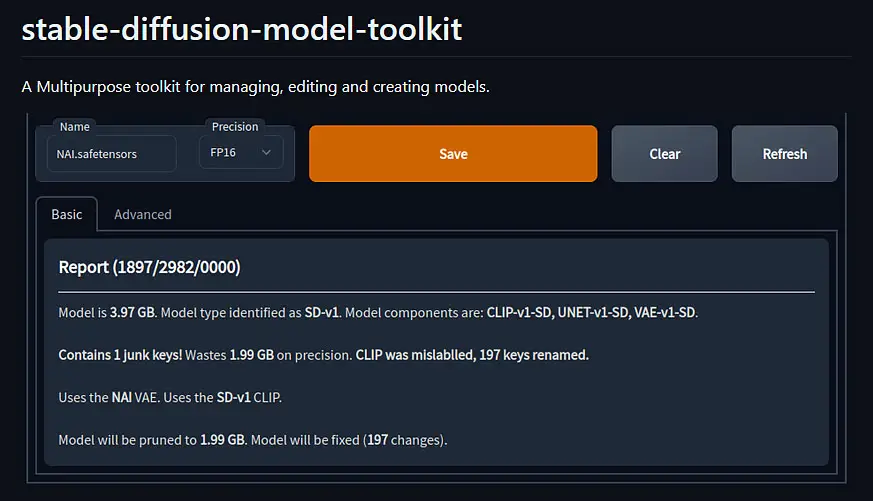

- Use the model toolkit extension for the Automatic1111 Stable Diffusion WebUI. You can install it from the WebUI itself.

- Make use of the SD WebUI Model Converter extension by Akegarasu. This is another extension for the WebUI that lets you prune Stable Diffusion models.

- Utilize the Stable Diffusion Prune Python script by lopho. This external Python script allows you to prune models using a single command.
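If you are curious what slimming down a checkpoint can look like under the hood, one common approach is simply to drop entries that are only needed to resume training. The sketch below works on a plain Python dictionary and is an assumption-heavy illustration, not the code of any of the tools listed above: the function name `strip_training_only_entries`, the `model_ema.` prefix, and the key names in the toy checkpoint are assumptions modeled on a typical Stable Diffusion v1 `.ckpt` layout.

```python
def strip_training_only_entries(checkpoint, drop_prefixes=("model_ema.",)):
    """Return only the weights needed for inference.

    Assumes (hypothetically) that the weights live under a "state_dict" key
    and that training-only copies use one of the prefixes in `drop_prefixes`.
    Anything outside the state dict, such as optimizer state, is left behind.
    """
    state_dict = checkpoint.get("state_dict", checkpoint)
    return {
        key: value
        for key, value in state_dict.items()
        if not key.startswith(drop_prefixes)
    }


# Toy stand-in for a loaded checkpoint; real ones hold large tensors.
toy_checkpoint = {
    "state_dict": {
        "model.diffusion_model.out.weight": [0.1, 0.2],
        "model_ema.decay": 0.9999,
        "first_stage_model.decoder.conv_out.bias": [0.0],
    },
    "optimizer_states": ["large training-only data"],
    "global_step": 1000,
}

slim = strip_training_only_entries(toy_checkpoint)
print(sorted(slim))
```

Real checkpoints hold tensors instead of small lists, so in practice you would load and save the file with your ML framework. It is also worth keeping the original file around in case you want to continue training later.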

Now you know what pruning means in deep learning and what advantages pruned models offer. Go and use that knowledge on your journey with Stable Diffusion. Until next time!

Also check out: How To Use LyCORIS Models In Stable Diffusion – Quick Guide (+LoRA vs. LyCORIS)