Here are the absolute best uncensored models I’ve found and personally tested for AI RP/ERP, chatting, coding and other LLM-related tasks that can be done locally on your own PC. It goes without saying that these will work with any other large language model software, but I’ve run all of them using the Oobabooga WebUI and KoboldCpp/SillyTavern. Let’s roll!

Check out also: How To Set Up The OobaBooga TextGen WebUI – Full Tutorial

7B Models? – What Does The “B” Stand For?

The “B” you probably keep seeing in various large language model names is short for “billion” and refers to the number of parameters the model has. In this case it means that a model has 7 billion parameters.

In general, the more parameters an LLM has, the better it can perform in more complex tasks (because of its greater capacity for learning). However, larger models also take up more space and, depending on their precision level, the optimization methods at play and a few other factors, require more VRAM to load and use.
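As a rough illustration of how parameter count and precision translate into memory, the sketch below estimates the size of the model weights alone. The function name and the chosen precisions are illustrative assumptions, not part of any tool mentioned here, and real-world usage will be higher because the context cache and runtime overhead come on top. Actual quantized formats also tend to use slightly more than their nominal bits per weight.

```python
def weight_memory_gib(num_params: float, bits_per_weight: float) -> float:
    """Approximate memory needed for the model weights alone, in GiB."""
    total_bytes = num_params * bits_per_weight / 8
    return total_bytes / 1024**3


# Hypothetical comparison of one 7B model at three nominal precision levels.
for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"7B at {label}: ~{weight_memory_gib(7e9, bits):.1f} GiB")
# 7B at 16-bit: ~13.0 GiB
# 7B at 8-bit: ~6.5 GiB
# 7B at 4-bit: ~3.3 GiB
```

This is why an unquantized 7B model does not fit on an 8GB card, while a 4-bit version of the same model does with room to spare.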

This is a part of the larger LLM naming convention in which the model names are created from a few connected parts most commonly including: the model family, model’s current version/iteration, parameter size and quantization format.

Although not all model names follow this convention, it is basically agreed upon and widely used when publishing trained large language models online.
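To make the convention a bit more concrete, here is a minimal sketch that pulls the parameter size and a quantization tag out of a model name. The `parse_model_name` helper and the short list of quantization tags are assumptions made for illustration only. As noted above, not every name follows the pattern, so treat the result as a best guess.

```python
import re

# Matches a size token such as "7B", "7b" or "1.5B".
SIZE_RE = re.compile(r"^(\d+(?:\.\d+)?)[bB]$")
# Assumed, non-exhaustive list of quantization format tags.
QUANT_TAGS = {"GGUF", "GPTQ", "AWQ", "EXL2"}


def parse_model_name(name: str) -> dict:
    """Split a hyphen-separated model name into rough components."""
    info = {"params_billions": None, "quantization": None, "other": []}
    for part in name.split("-"):
        size = SIZE_RE.match(part)
        if size and info["params_billions"] is None:
            info["params_billions"] = float(size.group(1))
        elif part.upper() in QUANT_TAGS:
            info["quantization"] = part.upper()
        else:
            # Family, version and any extra tags end up here.
            info["other"].append(part)
    return info


print(parse_model_name("Dolphin-2.8-Mistral-7B"))
print(parse_model_name("Yarn-Mistral-7b-128k"))
```

Everything the helper does not recognize (family, version, extras like `128k`) is simply collected in `other`, since those parts vary too much between publishers to parse reliably.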

Need a GPU upgrade to run larger, higher quality models with OobaBooga? – Here is my curated GPU list for LLMs – Best GPUs For Local LLMs This Year (My Top Picks!)

What Were My Criteria? – Why These Models

This list focuses on 7B models, as these are among the most used by people without access to larger amounts of video memory, and in many cases they can be loaded even on older 8GB VRAM GPUs. Moreover, on graphics cards with more video memory on board, they leave room for larger context windows and longer conversations.
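To see why extra video memory helps with longer conversations, here is a rough sketch of how much memory the context (the key/value cache) takes on top of the weights. The layer and head counts are assumptions based on the published Mistral-7B configuration (32 layers, 8 key/value heads, head size 128), and the estimate assumes an unquantized 16-bit cache. Your backend may store the cache differently, so take the numbers as ballpark figures.

```python
def kv_cache_gib(context_tokens: int, n_layers: int, n_kv_heads: int,
                 head_dim: int, bytes_per_value: int = 2) -> float:
    """Approximate key/value cache size in GiB for a given context length."""
    # Factor 2: one key vector and one value vector per layer, head and token.
    bytes_per_token = 2 * n_layers * n_kv_heads * head_dim * bytes_per_value
    return context_tokens * bytes_per_token / 1024**3


# Assumed Mistral-7B-style architecture values (see the note above).
for ctx in (4096, 8192, 32768):
    print(f"{ctx} tokens: ~{kv_cache_gib(ctx, 32, 8, 128):.1f} GiB")
# 4096 tokens: ~0.5 GiB
# 8192 tokens: ~1.0 GiB
# 32768 tokens: ~4.0 GiB
```

The cache grows linearly with the context length, so doubling the context window doubles this part of the memory bill.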

All of the models listed were used and tested by me in casual conversations, roleplay scenarios and simple logic/language processing/text formatting tasks, and their performance turned out to be reasonably good.

Remember that ideally you want to test out a few different models to see which model’s response style fits your RP/chatting style the best. The outputs for the same input prompt may vary widely between different models, and the best way to see the difference is to try them out yourself.

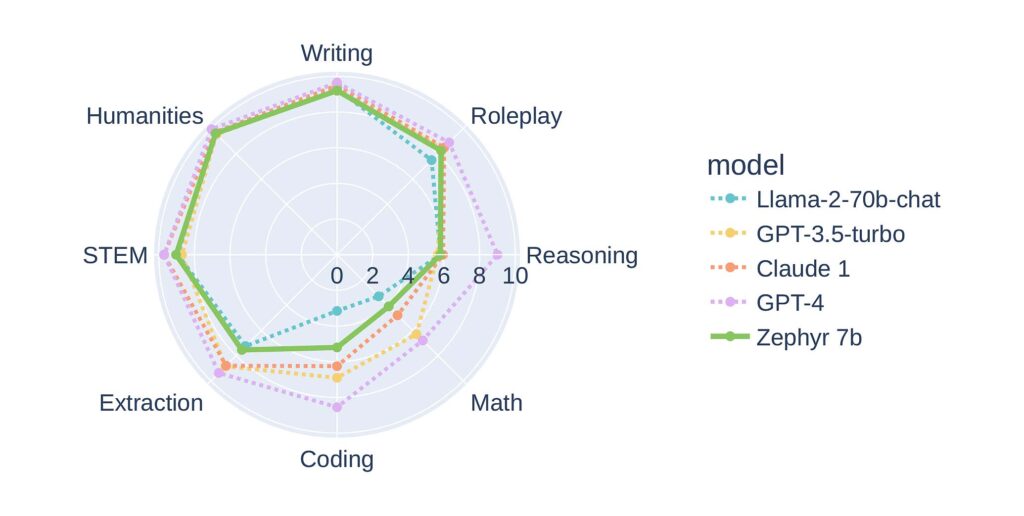

If you want direct benchmark tests, some of these models do feature benchmark scores on their main Hugging Face repository pages, so feel free to check those out!

Is The Output Quality of 7B Models Any Good?

As you probably know, larger doesn’t always mean better. But still, often it does. One could argue that a smaller model trained on a better dataset could outperform a larger model trained on half-garbage data, and one would probably be right. With all that said, it’s hard for the smaller, lower-precision 7B models to reach the performance of LLMs like GPT-4o from OpenAI, which is rumored to feature around 220 billion parameters in total (which would be denoted as 220B), although OpenAI has not published an official figure.

The reality however is that for less complex tasks like roleplaying, casual conversations, simple text comprehension tasks, writing simple algorithms and solving general knowledge tests, the smaller 7B models can be surprisingly efficient and give you more than satisfying outputs with the right configuration. So let’s get to the list, there are quite a few models to choose from here!

1. Dolphin-2.8-Mistral-7B

Dolphin-2.8-Mistral-7B is an uncensored Dolphin model based on Mistral with alignment/bias data removed from the training set, which is designed for coding tasks, but really excels at simulating natural conversations and can easily go beyond that, for instance acting as a writing assistant.

I was really surprised by how well this model does with various roleplaying tasks and character-based chat sessions considering it was originally meant for solving simple programming tasks. For now, it is among my 3 go-to models, and most of the time I load it up first when playing around with Oobabooga. Overall, one of the best hidden gems I came across. You should definitely try it out!

2. Wizard-Vicuna-7B-Uncensored

Wizard-Vicuna-7B-Uncensored is a model trained on top of LLaMA-7B using a subset of the Wizard-Vicuna dataset from which any responses containing moralizing or alignment of any kind were removed. This avoids unwanted “guidance” from the model when generating responses, which would otherwise be subject to the classic on-the-go judgement from the AI during your chat.

This unfiltered model is commonly recommended for roleplay use, and to be honest it’s up there when it comes to output quality. Together with OpenHermes-2.5-Mistral-7B and Dolphin it was among my 3 most used 7B models for a pretty long time. All in all, another one really worth testing out.

3. Yarn-Mistral-7b-128k

Yarn-Mistral-7b-128k. There isn’t much to say about this one, other than it’s a really solid 7B model which can be used both for casual AI character roleplay and some more serious purposes. It’s a direct extension of the base Mistral-7B-v0.1 model, and it supports a rather large 128k token context window.

Good for RP, great for most of the tasks you throw at it. Works well with guided character data and is in general a great choice when it comes to higher quality 7B models out there. Not censored, but also not further mixed with more explicit datasets.

4. OpenHermes-2.5-Mistral-7B

The OpenHermes-2.5-Mistral-7B model is one of the most popular fine-tunes of the original Mistral model. Being another flavor of Mistral, it also inherits its main features. This model is trained on additional coding datasets, however it also passes various non-code benchmarks with great scores. While being one of the older options, it still performs pretty well in RP contexts!

If you didn’t really like the base Mistral model or the YaRN version, be sure to test it out, as the outputs from this one are significantly different. Now let us continue. Beware: more “heavily uncensored” models ahead!

5. Pygmalion-2-7b

Pygmalion-2-7b is based on the Llama-2-7B model from Meta AI. It is meant to be a model designed and fine-tuned specifically for roleplaying, casual conversations, storytelling and writing assistant use. And it does its job really well.

Trained on a large amount of RP convos, short stories and conversation data, it is specifically prepared for use with character data. Its first version was one of the most popular open-source large language models made for private roleplay sessions. It is also fully uncensored. Not much more to say here!

6. Starling-LM-7B-alpha

Starling-LM-7B-alpha, fine-tuned from the base Openchat 3.5 model, which was itself based on the first version of Mistral-7B, is also one of the most popular picks for local LLM use. In many benchmarking categories its scores are surprisingly close to those of GPT-4, and it is generally a very solid model.

While it isn’t my first pick for AI roleplay, it’s a solid model and can be rather easily steered towards a natural simulated conversation. Expect quality outputs in the style of other Mistral-based models with the right guidance. Yet another great pick.

7. Toppy-M-7B

The Toppy-M-7B is a model with a rather telling name often recommended when it comes to ERP, and for a good reason. Based on the uncensored merge of a few different models and LoRAs, this one is definitely worth trying out, especially if you’re into generating some more spicy roleplay scenarios.

That’s pretty much it. Definitely not my first pick, but I can see how many of you may be interested in it. As a fun challenge you can always try and force it to do some light coding for you. Test away!

8. Loyal-Toppy-Bruins-Maid-7B-DARE

Loyal-Toppy-Bruins-Maid-7B-DARE is the last model on this list. Yes, this name is also real. And yes, it is a merge built using the previously mentioned Starling-LM-7B-alpha, the Toppy-M-7B and a few different models as a base. It’s optimized for roleplaying but can also perform many other tasks with satisfying output quality given the right prompt.

This one is quite an interesting merge, and it can give surprisingly high quality results considering its funky name and the training material of its source models. And with that, I’m out!

Check out also: Character.ai Offline & Without Filter? – Free And Local Alternatives