With the rapid development of new software for self-hosting large language models and running local LLM inference, support for AMD graphics cards is becoming increasingly widespread. Here is a list of the most popular LLM software compatible with both NVIDIA and AMD GPUs, along with additional information you might find useful if you’re just starting out. I will do my best to update this list frequently, so that it remains a useful and reliable resource for as long as possible. Last article update: October 2025.

If you’re looking for the best AMD graphics cards you can get for local AI inference using the LLM software presented on this list, I’ve already put together a neat resource for picking the right GPU model for your needs. Feel free to take a look!

Also, if you want to get into AI image generation too, you can check out my full list and comparison of the most popular Stable Diffusion WebUI software here.

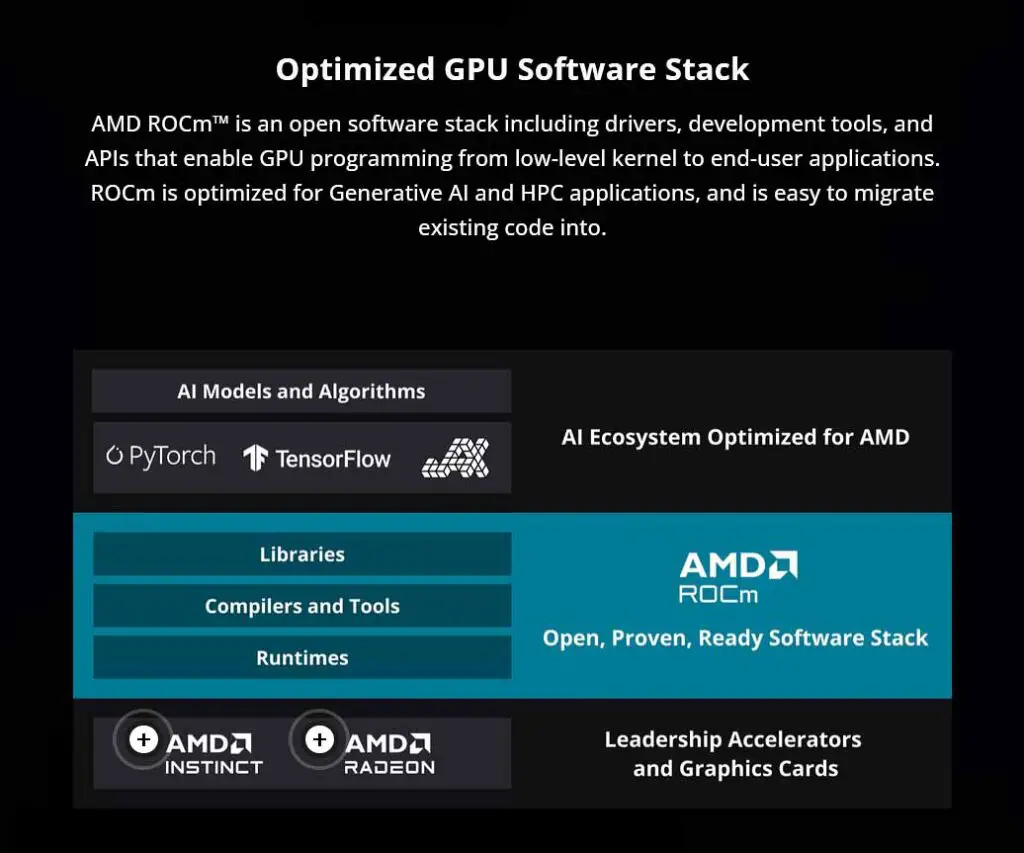

Base Windows/Linux Requirements For AMD GPU Compatibility – ROCm

The AMD ROCm software stack, which is still in active development, is one of the things you’ll need if you want to efficiently run your large language models using a new AMD graphics card.

ROCm is an open-source software stack for GPU-accelerated computing, and it works in a similar way to the closed-source CUDA from NVIDIA. Regardless of your operating system, it is one of the key components for using AMD graphics cards efficiently, especially the newest models.

As of late 2025, AMD is still actively working to make ROCm a true cross-platform solution. While historically ROCm releases for Windows have lagged behind their Linux counterparts, recent developments seem to be slowly closing this gap. With the introduction of the latest version of ROCm, support has been extended to Windows and an even wider range of Radeon GPUs, allowing developers to run AI workloads directly on AMD hardware in a Windows environment.

For now, full ROCm support is still most robust for the newest AMD graphics cards. While on Linux you can often enable support for officially unsupported AMD GPUs (like some in the Radeon RX 6000 series) by modifying the HSA_OVERRIDE_GFX_VERSION environment variable, this is not as straightforward on Windows.

To install ROCm on Windows, you’ll need to install the AMD HIP SDK. On Linux, you can use the official install scripts available on the AMD ROCm website.

If installing or using ROCm isn’t an option, most of the software on this list also supports the Vulkan graphics API or the OpenCL framework. While generally slower than ROCm, these will still allow you to use your AMD GPU for model inference.
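As a rough illustration of that fallback order, the sketch below checks which diagnostic tools are present on your PATH. It assumes that `rocminfo`, `vulkaninfo`, and `clinfo` were installed alongside their respective runtimes, which is not guaranteed on every system. The function name `pick_backend` is my own, and the software on this list performs its own, more thorough detection.

```python
import shutil
from typing import Callable, Optional

# Probe tools, in order of preference. Finding a tool on PATH only hints
# that the matching runtime is installed; it does not prove the GPU works.
PROBES = [("rocm", "rocminfo"), ("vulkan", "vulkaninfo"), ("opencl", "clinfo")]


def pick_backend(which: Callable[[str], Optional[str]] = shutil.which) -> str:
    """Return the first backend whose probe tool is found, else 'cpu'."""
    for backend, tool in PROBES:
        if which(tool) is not None:
            return backend
    return "cpu"


if __name__ == "__main__":
    print("Suggested backend:", pick_backend())
```

The `which` parameter exists only so the lookup can be swapped out for testing. In normal use you would call `pick_backend()` with no arguments.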

Unsupported AMD GPUs – HSA_OVERRIDE_GFX_VERSION

As mentioned, if you have an older AMD GPU that ROCm doesn’t officially support, you might still be able to benefit from ROCm GPU acceleration on Linux. Setting the HSA_OVERRIDE_GFX_VERSION environment variable overrides the GPU architecture (GFX) version that the ROCm runtime detects, so the ROCm libraries treat your card as a closely related, officially supported model. This can enable GPU acceleration on unsupported hardware, although it is not guaranteed to work for every card.

Again, keep in mind that for now, modifying this variable is primarily a solution for Linux systems.
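Here is a minimal sketch of how the override can be applied when launching a program from Python on Linux. The variable takes a version in `x.y.z` form, so the target name `gfx1030` corresponds to `10.3.0`, a value commonly reported to work for several RX 6000 series cards. The helper names below are my own, the conversion only handles target names made of decimal digits, and the right value for your card is something you should confirm in the documentation of the software you use.

```python
import os
import re


def gfx_target_to_version(target: str) -> str:
    """Turn a target name such as 'gfx1030' into the 'x.y.z' form ('10.3.0')."""
    # Assumption: major version is 1-2 digits, minor and stepping are 1 digit each.
    match = re.fullmatch(r"gfx(\d{1,2})(\d)(\d)", target)
    if match is None:
        raise ValueError(f"unsupported target name: {target!r}")
    return ".".join(match.groups())


def env_with_override(target: str) -> dict:
    """Copy the current environment and add the override for `target`."""
    env = dict(os.environ)
    env["HSA_OVERRIDE_GFX_VERSION"] = gfx_target_to_version(target)
    return env


if __name__ == "__main__":
    env = env_with_override("gfx1030")
    print(env["HSA_OVERRIDE_GFX_VERSION"])  # prints 10.3.0
    # Pass `env` to the program you want to launch, for example:
    # subprocess.run(["./your-llm-program"], env=env)  # hypothetical path
```

Setting the variable only for the launched process, rather than globally, keeps the override from affecting other ROCm software on the same machine.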

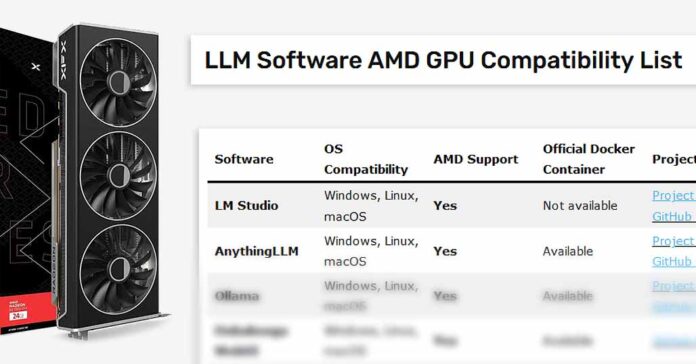

LLM Software Full Compatibility List – NVIDIA & AMD GPUs

Here is the full list of the most popular local LLM software that currently works with both NVIDIA and AMD GPUs. Note that some programs might require a bit of tinkering to get working with your AMD graphics card. Refer to the table below and use the project links to check out further details on the official websites and repositories for the listed software. All of the listed programs support ROCm and Vulkan, unless stated otherwise.

If you’re reading this on a mobile device, scroll right on the table to see all the columns.

| Software | GUI/WebUI Available | OS Compatibility | AMD Support | Official Docker Container | Project Links |
|---|---|---|---|---|---|
| LM Studio | Yes | Windows, Linux, macOS | Yes | Not available | Project Site, GitHub Page |
| GPT4All | Yes | Windows, Linux, macOS | Yes | Not available | Project Site, GitHub Page |
| KoboldCpp | Yes | Windows, Linux, macOS | Yes | Not available | GitHub Page (Main), GitHub Page (ROCm) |
| MLC LLM | Yes | Windows, Linux, macOS, iOS, Android* | Yes | Not available | Project Site, GitHub Page |
| Llama.cpp | No | Windows, Linux, macOS | Yes | Available | GitHub Page |
| Ollama | No | Windows, Linux, macOS | Yes | Available | Project Site, GitHub Page |
| AnythingLLM | Yes | Windows, Linux, macOS | Yes | Available | Project Site, GitHub Page |
| Oobabooga WebUI | Yes | Windows, Linux, macOS | Yes | Available | GitHub Page |
| Jan | Yes | Windows, Linux, macOS | Yes, only via Vulkan | Available | Project Site, GitHub Page |
| Llamafile(s) | No | Windows, Linux, macOS | Yes | Available** | Project Documentation, GitHub Page |

*Android and iOS are listed only if the software features an official Android/iOS application. Some of the programs here (for instance, Llama.cpp and Ollama) can, with some additional effort, be run on Android devices, for example by using Termux.

**You can easily containerize Llamafiles using the following method.

If you have no prior experience with this kind of software and want to know which of these to choose, or are simply curious about the installation process for each, the next section of this article should help. This is another resource that may interest you: Beginner’s Guide To Local LLMs – How To Get Started

So, Which One of These Is The Easiest to Set Up & Install?

Below is a quick summary of all the software from the table above with additional comments, mainly focusing on the simplicity of the installation process. This information will be updated alongside the table above.

- LM Studio – Extremely easy to set up for both AMD and NVIDIA users, making it one of the best options for beginners. It features one-click installers for Windows, macOS, and Linux. Note that unlike the other software on this list, it is closed source.

- GPT4All – Similar to LM Studio, it offers simple installers for Windows, macOS, and Linux. It also has broad GPU support through Vulkan.

- KoboldCpp – In addition to its ROCm-compatible fork, it has a one-click installer available for Windows and a simple installation script for Linux. I’ve also prepared a full setup guide for it here: Quickest SillyTavern x KoboldCpp Starter Guide (Local AI Characters RP)

- MLC LLM – Can be easily installed using Python pip with a single console command on Windows, macOS, and Linux. Installing it in an isolated conda virtual environment is highly recommended. Native Android and iOS mobile apps are a strong point for this software.

- Llama.cpp – On Windows, pre-compiled binary files are available. On Linux, you can install it using brew, flox, or nix. Recent updates have improved support for a wider range of AMD GPUs.

- Ollama – Simple installers are available for Windows and macOS, with an installation script for Linux. It has an official list of supported AMD cards.

- AnythingLLM – For Windows and macOS, AnythingLLM has a one-click installer that should automatically download the necessary dependencies for GPU acceleration. A simple installation script is available for Linux.

- Oobabooga WebUI – On Windows, it requires a few extra steps to be compatible with AMD graphics cards. On Linux, ensuring AMD compatibility requires manually installing some additional software packages.

- Jan – Installers are available for Windows, macOS, and Linux. Vulkan acceleration support for AMD GPUs needs to be enabled in the software settings.

- Llamafile – Llamafiles are designed to be used right away after downloading and typically do not require any additional setup.

Are NVIDIA Cards any Better When It Comes To Local LLMs?

The reality is that AMD is still catching up to NVIDIA in AI software compatibility, largely because ROCm has developed more slowly than NVIDIA’s CUDA. Even so, many of AMD’s newer GPU models are more than capable of handling most tasks related to hosting large language models locally.

When using AMD graphics cards with compatible LLM software, you might occasionally encounter a more complex setup process and slightly slower inference speeds. However, these issues are likely to become less significant as the ROCm framework continues to evolve.

Currently, AMD graphics cards (and lately also the Intel Arc GPUs) are often more affordable than those from NVIDIA, especially when considering the price per gigabyte of VRAM, a crucial factor for hosting larger, higher-quality language models.
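To make the comparison concrete, here is a small sketch that ranks cards by their cost per gigabyte of VRAM. The card names, prices, and VRAM sizes are hypothetical placeholders and not real market data, so substitute the current prices of the models you are considering.

```python
def price_per_gb(price: float, vram_gb: float) -> float:
    """Return the cost of one gigabyte of VRAM for a card."""
    if vram_gb <= 0:
        raise ValueError("vram_gb must be positive")
    return price / vram_gb


# Hypothetical cards as (price, VRAM in GB), for illustration only.
cards = {"Card A": (600.0, 16), "Card B": (900.0, 16), "Card C": (700.0, 24)}

if __name__ == "__main__":
    # List the cards from cheapest to most expensive per gigabyte of VRAM.
    ranked = sorted(cards.items(), key=lambda item: price_per_gb(*item[1]))
    for name, (price, vram) in ranked:
        print(f"{name}: {price_per_gb(price, vram):.2f} per GB")
```

Price per gigabyte is only one factor. Memory bandwidth and software support also affect how well a card handles larger models.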

Taking all this into account, I can safely say this: AMD cards are becoming increasingly reliable for handling various AI-related tasks. Their lower price point, even for the latest models, makes them a worthwhile option for many users.

If you can accept the possibility of a more involved software configuration in some rare cases, as well as potential software incompatibility with older cards, then sticking with or choosing Radeon cards for your new LLM hosting setup can be a very reasonable idea. Let’s keep our fingers crossed for AMD in the near future.

You might also like: Intel Arc B580 & A770 For Local AI Software – A Closer Look